TL;DR:

As artificial intelligence advances, researchers need to adapt to new risks and best practices to conduct responsible user research.

- Key ethical concerns include: ingrained bias, data privacy, lack of informed consent from participants, environmental impact, and explainability of AI insights

- AI research and synthetic users cannot fully substitute for human research; AI should be treated as a complementary resource

- Teams should invest in research tools that prioritize user trust and give researchers control over AI features

Takeaway: If product teams implement ethical guardrails that evolve with AI tool usage, humans and AI can form effective partnerships that empower researchers and scale insights.

Since the introduction of ChatGPT to the masses, AI-powered tools like Perplexity, Gemini and Claude have been flooding the product world one AI prompt at a time.

Some user researchers have been early adopters, helming the latest wave of innovation. Others have been more skeptical, calling for consideration of the risks and ethical implications of using AI and large language models (LLMs) in user research.

Whichever side you fall on, AI is here to stay—and that means being informed on how to use it safely, ethically, and efficiently. (That’s where this article comes in.)

How AI ethics impact UX research

The undebatable truth is that AI helps product teams scale product research and increase efficiency. An AI co-pilot means faster research, more insights, and quicker decisions.

But while 58% of product teams already incorporate AI into their research practice, some remain reluctant to adopt it. Our Future of Research Report 2025 found the top concerns about using AI were:

- Trust and credibility (67%)

- Ethical and privacy concerns (39%)

- Security concerns (35%)

- Inherent bias (32%)

Other doubts range from AI’s tendency to present false information and experience ‘hallucinations’, to its propensity to churn out biased data—not to mention its massive contribution to the climate crisis.

Want a rundown of the other pros and cons of AI vs. human-led user research?

Check out our deep dive into where AI excels, and where human researchers are irreplaceable. Here’s how AI and human researchers stack up against one another.

While justified, these concerns don’t stop AI from evolving—and research workflows with it. As the demand for user insights continues to grow, product teams need to embrace AI-powered tools to accelerate research.

But can you do this while balancing ethics and efficiency?

With the right safeguards in place, AI can amplify the role and expertise of researchers, empowering them to take on more strategic, business-centric projects—without jeopardizing the integrity of user insights.

Because of AI we're moving into more strategic roles which allow us to focus on higher visibility, higher-impact business decisions.

Daniel Soranzo

Lead UX Researcher at GoodRX

Top ethical considerations and best practices when using AI to shape user experience

Just as with cognitive biases in UX research, integrating AI responsibly means understanding the ethical risks before learning how to counteract them.

Top challenges to address include:

- Bias amplification and reinforcement

- Data privacy and lack of informed consent

- Limited transparency in AI-driven decisions

- Environmental impact of AI processing

Read on for the top ethical conundrums to consider—with best practices and guardrails to prevent them catching you out.

Bias amplification and reinforcement

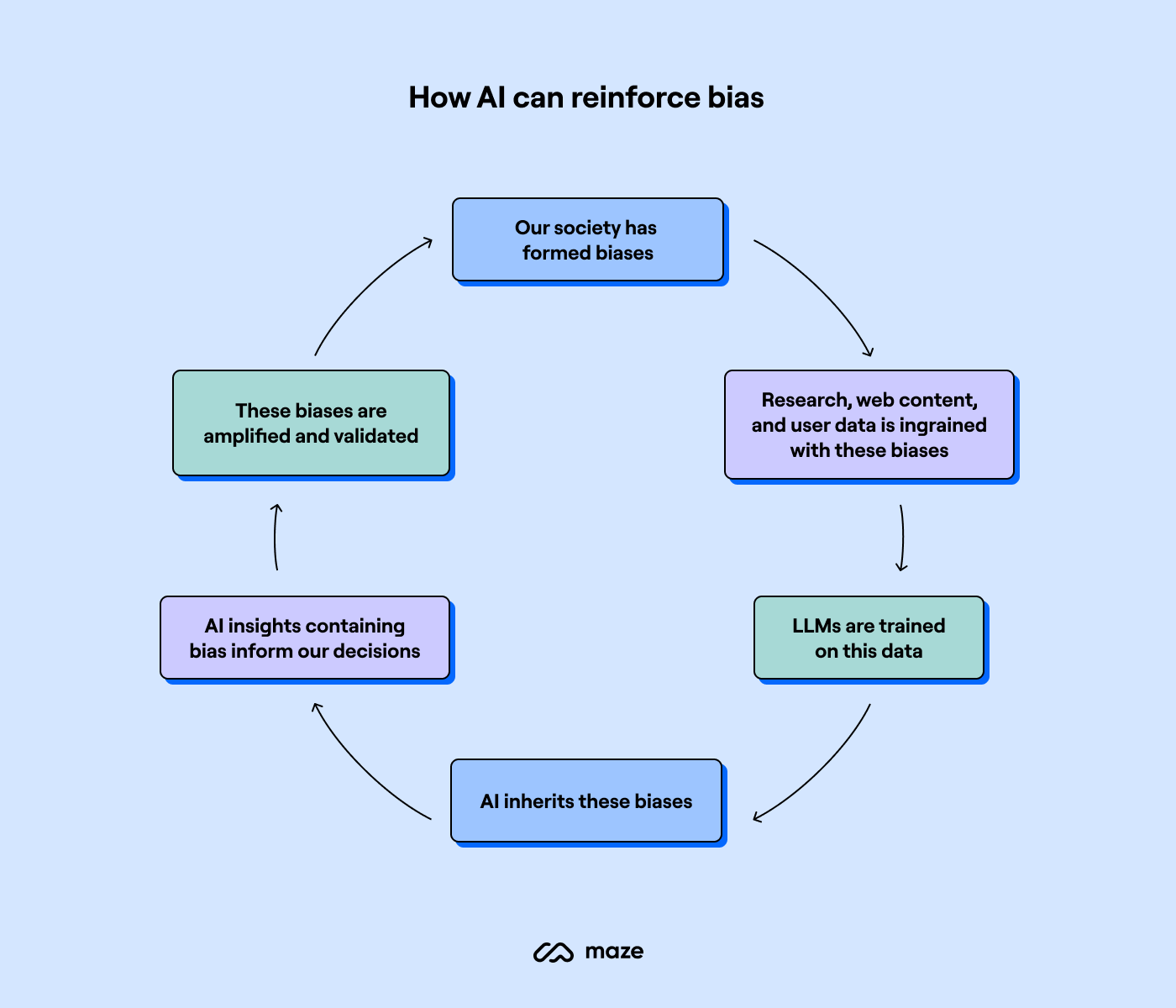

The potential for ingrained bias across generative AI output is a top concern for many researchers.

This isn’t unfounded: AI technology is known to display bias. It’s only as good as the data it’s trained on, and since much of our society is fundamentally biased (e.g. eurocentrism, heteronormativity), that data, and the AI trained on it, carries the same assumptions.

Think about it like a child repeating their parents’ views even when they don’t understand: AI listens, absorbs, and imitates.

In practice, this can look like AI distorting data: for example, converging varied user feedback and experiences into a homogeneous narrative that ‘fits’ expectations, skimming off any diverse or surprising perspectives along the way.

Even if you select the correct participants in an inclusive and diverse way, AI will tend to skew the data. You risk disregarding what makes those points of view unique because the more you iterate, the more the uniqueness is stripped out in order to make the feedback converge to a central bias.

Andrea Monti Solza

Researcher & Co-founder of Conveyo

How to combat bias in AI-generated insights

The best way to minimize bias is to incorporate fully inclusive UX design and research practices throughout your wider research workflow. These changes will then trickle down to your AI usage.

This might look like:

- If you’re creating your own GPT, using diverse training data that represents your full user population

- Consciously recruiting from a diverse population for user interviews and usability testing

- Implementing bias-detection as part of your AI research workflow (certain tools like Maze’s The Perfect Question can do this for you)

- Establishing human oversight practices, specifically focused on identifying biases

- Using supplementary human-led research targeting underrepresented groups
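To make the bias-detection step more concrete, one simple check is whether an AI summary draws its evidence evenly from every participant segment, since the risk described earlier is that unique perspectives get smoothed away. The sketch below is a minimal illustration in Python, not how any particular tool works: it assumes each response has been tagged with a hypothetical segment label, and that your AI tool reports which responses it cited. A low score is a cue for human review, not proof of bias.

```python
from collections import Counter


def segment_coverage(responses: dict[str, str], cited_ids: list[str]) -> dict[str, float]:
    """Share of each segment's responses that an AI summary cites as evidence.

    responses: response ID -> participant segment label (hypothetical tagging)
    cited_ids: response IDs the AI summary references
    """
    totals = Counter(responses.values())
    # Deduplicate citations so one response can't inflate its segment's score
    cited = Counter(responses[r] for r in set(cited_ids) if r in responses)
    return {segment: cited[segment] / totals[segment] for segment in totals}


def underrepresented(coverage: dict[str, float], tolerance: float = 0.5) -> list[str]:
    """Flag segments cited at less than `tolerance` times the average coverage."""
    if not coverage:
        return []
    mean = sum(coverage.values()) / len(coverage)
    return sorted(s for s, c in coverage.items() if c < tolerance * mean)


# Example: six responses from three segments; the AI summary cites four of them
responses = {
    "r1": "desktop", "r2": "desktop", "r3": "desktop",
    "r4": "mobile", "r5": "mobile",
    "r6": "screen_reader",
}
coverage = segment_coverage(responses, ["r1", "r2", "r3", "r4"])
print(coverage)                    # {'desktop': 1.0, 'mobile': 0.5, 'screen_reader': 0.0}
print(underrepresented(coverage))  # ['screen_reader']
```

The `tolerance` of 0.5 is an arbitrary starting point; a team would tune it and pay particular attention to segments it deliberately recruited for.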

Bias in AI is not merely a technical issue but a societal challenge. AI systems are increasingly integrated into decision-making processes in healthcare, hiring, law enforcement, and other critical areas. Therefore, addressing bias is not only about improving technology but also about fostering ethical responsibility and social justice.

Chapman University on AI bias

Data privacy and lack of informed consent

One of the most frequent hesitations around AI in UX research is data privacy and storage—a concern intrinsically intertwined with participant consent.

Understanding the ins and outs of how user research tools process and retain your information is tricky at the best of times, but when you introduce GPTs, LLMs and third-party data-processing jargon… it all gets a bit overwhelming.

Especially if you’re a research participant just looking for the box that says ‘Accept Ts&Cs’.

This opacity of AI systems (called the ‘black box problem’) means research participants can’t clearly understand how their data is used—and therefore can’t fully consent.

Other concerns over how AI may use data include:

- Privacy invasion and profiling: AI can infer personally identifiable information (PII), such as age, income, health or ethnicity, even from seemingly unrelated data

- Hidden scope creep: Data collection may be for one purpose, but later reused (often in a very different way), or shared with one group then passed to another

- Training data: Users may incidentally consent to their data being used to train AI models, leading to extensive usage and sharing of personal data

- Difficulty retracting consent: Once data is fed to AI it is challenging to track and fully delete from the system—meaning altering your consent or retracting information is near impossible

- Data storage and sharing: User information can be handed between systems, passed to third-party apps, or even bought and sold between companies, making it hard to know exactly who you’re sharing it with

One new consideration for product teams is where consent falls when users are interacting with AI. After Reddit’s run-in with researchers deploying AI bots posing as people, product teams must make a conscious effort to over-communicate when users are speaking with AI—and clearly gain consent before doing so.

You have a lot of responsibility in how you communicate what’s going on with your users. If you’re inconsiderate of what it actually feels like using that product, you’re at risk of creating a black box.

Peter Kildegaard

Senior Product Manager at Maze

How to handle consent and data storage with third-party AI tools

While AI has changed what users need to consent to—and the due diligence product teams must have with third-party tools—many standard user research best practices remain the same.

Data privacy with AI testing isn’t significantly different from manual research, as long as data isn’t being used for training the models. The same principles exist: you need to make sure you get consent, and that the data isn’t being used for unwanted purposes.

Peter Kildegaard

Senior Product Manager at Maze

Informed consent means ensuring individuals understand risks, benefits, and implications of their consent before giving it.

To minimize the risk to your users, you should:

- Ask for consent at the start of a study, and again at the end once participants know what’s been discussed

- Be transparent about AI’s role in your UX research process: what data is used, who it’s shared with, how it’s processed, and potential future uses

- Ensure user data is not being used to train LLMs—or explicitly state if it is

- Update consent forms and Ts&Cs to explicitly mention AI analysis, interview transcription, data processing, and third-party tools

- Avoid blanket or broad consent statements—these miss potential downstream uses or data repurposing

- Maximize explainability by using plain language and avoiding technical jargon

- Create variable consent levels with options allowing participants to opt-in/out of specific AI usage (e.g. AI thematic analysis or future repurposing from a UX research repository), while still participating in the study

- Protect vulnerable users by implementing participation safeguards for sensitive groups, topics, or PII

- Seek new consent if data will be used beyond the original scope
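The variable consent levels described above can be represented as a per-participant record that is checked before any AI step touches that participant's data. Here is a minimal Python sketch; the scope names (`ai_transcription`, `ai_thematic_analysis`, and so on) are hypothetical placeholders rather than features of any specific tool.

```python
from dataclasses import dataclass, field

# Hypothetical AI usage scopes; adapt these to the AI features your tools actually use
AI_SCOPES = {"ai_transcription", "ai_thematic_analysis", "repository_reuse", "model_training"}


@dataclass
class ConsentRecord:
    participant_id: str
    # Only scopes the participant explicitly opted in to; anything absent counts as "no"
    granted: set[str] = field(default_factory=set)

    def allows(self, scope: str) -> bool:
        if scope not in AI_SCOPES:
            # An unlisted use is outside the original scope and needs fresh consent
            raise ValueError(f"Unknown AI scope {scope!r}: seek new consent first")
        return scope in self.granted


def eligible_for(records: list[ConsentRecord], scope: str) -> list[str]:
    """IDs of participants whose data may be used for the given AI scope."""
    return [r.participant_id for r in records if r.allows(scope)]


records = [
    ConsentRecord("p1", {"ai_transcription", "ai_thematic_analysis"}),
    ConsentRecord("p2", {"ai_transcription"}),  # still in the study, opted out of AI analysis
    ConsentRecord("p3"),                        # opted out of all AI usage
]
print(eligible_for(records, "ai_thematic_analysis"))  # ['p1']
print(eligible_for(records, "ai_transcription"))      # ['p1', 'p2']
```

Treating any scope that wasn't explicitly granted as a "no", and rejecting unknown scopes outright, mirrors two items in the list: avoiding blanket consent, and seeking new consent when data would be used beyond the original scope.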

Making sure your users understand exactly what they’re agreeing to is critical for ethical user research. If all of the above isn’t communicated, consent becomes misleading at best—or, at worst, coercive.

Limited transparency in AI-driven decisions

When AI assists in creating research questions, or synthesizing user insights, the line between human and AI-generated content becomes blurred.

These concerns around authenticity and attribution raise questions about intellectual ownership, research integrity, and trustworthiness of takeaways.

We know AI is prone to hallucinations, so how can researchers rely on AI-generated insights?

How to cultivate trust with AI-generated insights

Any good researcher is skeptical. They want traceability and reliability. So how can you support your team in ensuring authentic, quality insights?

- Opt for AI user research tools that prioritize trust and provide trackable insights

- Maintain clear documentation of AI’s role in every stage of the user testing process

- Create attribution guidelines to ensure transparency in UX research reports by labeling AI versus human-derived insights

- Establish review processes for verifying AI-generated insights

- Create your own GPT with integrated accountability protocols and evaluation criteria
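Attribution guidelines and traceability can be baked into how insights are stored. The following Python sketch, built on a hypothetical `Insight` record and an illustrative source-reference format, labels each insight as AI-generated or human-derived, keeps links back to the source data, and routes any AI insight that is unreviewed or has no traceable source to a human reviewer.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Origin(Enum):
    AI = "AI-generated"
    HUMAN = "Human-derived"


@dataclass
class Insight:
    text: str
    origin: Origin
    # Pointers back to source data, e.g. "interview-04@12:31" (hypothetical format)
    sources: list[str] = field(default_factory=list)
    reviewed_by: Optional[str] = None


def needs_review(insights: list[Insight]) -> list[Insight]:
    """AI insights that are unreviewed or untraceable should go to a human reviewer."""
    return [
        i for i in insights
        if i.origin is Origin.AI and (i.reviewed_by is None or not i.sources)
    ]


def report_line(insight: Insight) -> str:
    """Label every insight in a report with its origin and its sources."""
    sources = ", ".join(insight.sources) or "none"
    return f"[{insight.origin.value}] {insight.text} (sources: {sources})"


insights = [
    Insight("Users struggle to find the export button", Origin.AI,
            ["interview-04@12:31", "interview-07@03:10"], reviewed_by="researcher-a"),
    Insight("Pricing copy causes hesitation", Origin.AI),
    Insight("Admins want audit logs", Origin.HUMAN, ["interview-02@20:05"]),
]
for insight in needs_review(insights):
    print(report_line(insight))
# [AI-generated] Pricing copy causes hesitation (sources: none)
```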

How the product team at Maze builds trust with AI

Peter Kildegaard, Senior Product Manager at Maze:

There are two key things we do to ensure trust:

1. Put the right guardrails in for AI to be ethically responsible

2. Ensure the AI is as performant as possible

At Maze, we have very deliberate instructions we’re giving the AI. All these instructions are created through thorough testing with human participants, and bringing in best practices from research experts.

We’re also constantly evaluating the AI’s performance against evaluation criteria, which enables us to spot patterns, adjust prompts, and assess performance. It’s a mix of AI-enabled reviews and manual reviews. For AI reviews, we always use a different model from the one that performed the research.

The evaluation criteria could be research quality, like ‘Did it comprehensively cover the research goal?’, or ethics-related, like ‘Did it hallucinate? Did it stay on topic, or diverge into areas it shouldn’t be asking about?’
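A rubric like the one Peter describes can be encoded so that every evaluation is complete and, for AI reviews, performed by a different model from the one that ran the research. This is a minimal Python sketch under those assumptions; the rubric keys paraphrase the example questions above, and `model-a`/`model-b` are placeholder names, not a description of Maze's internal tooling.

```python
from dataclasses import dataclass

# Hypothetical rubric, paraphrasing the example criteria above
RUBRIC = {
    "covered_goal": "Did it comprehensively cover the research goal?",
    "no_hallucination": "Did it avoid hallucinating?",
    "on_topic": "Did it stay on topic instead of diverging into areas it shouldn't ask about?",
}


@dataclass
class Evaluation:
    generator_model: str      # model that ran the research (placeholder names below)
    reviewer_model: str       # model (or "human") that scored the output
    answers: dict[str, bool]  # rubric key -> passed?


def failed_criteria(evaluation: Evaluation) -> list[str]:
    """Return the rubric questions an output failed; an empty list means it passed."""
    if evaluation.reviewer_model == evaluation.generator_model:
        raise ValueError("Use a different model from the one that performed the research")
    missing = RUBRIC.keys() - evaluation.answers.keys()
    if missing:
        raise ValueError(f"Rubric incomplete, missing: {sorted(missing)}")
    return [RUBRIC[key] for key in RUBRIC if not evaluation.answers[key]]


result = failed_criteria(Evaluation(
    generator_model="model-a",
    reviewer_model="model-b",
    answers={"covered_goal": True, "no_hallucination": False, "on_topic": True},
))
print(result)  # ['Did it avoid hallucinating?']
```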

Environmental impact of AI processing

It’s impossible to talk about artificial intelligence without also acknowledging the impact AI is having on our environment.

While AI has the potential to help tackle climate change (scientists are already using it to detect patterns in extreme weather, improve fuel efficiency, and monitor pollution proactively), it is by and large worsening the climate crisis.

Just some of the ways AI impacts the environment are:

- Electricity usage

  - Predictions for 2026 show AI data centers requiring the same amount of electricity as the entire country of Japan, home to 124 million people

  - Training a single large AI model uses the same amount of electricity as ~130 US households consume in a year

  - One ChatGPT query uses 10x the electricity of one Google search

  - Digital chip manufacturers are already using more electricity annually than countries including Australia, Italy, Taiwan and South Africa

- Water consumption

  - AI-related infrastructure may soon consume 6x the amount of water used by Denmark, a country of 6 million people

  - One data center uses as much water in one day as 1,000 US households

- Carbon emissions

  - Training a single large AI model emits as much carbon as hundreds of US households combined

  - Data centers currently account for 2-4% of global greenhouse gas emissions, roughly the same as global aviation

How to counterbalance the environmental impact of using AI

While the responsibility largely sits at a corporate and organizational level, product teams can make a difference. And it’s part of everyone’s responsibility to consider ways to minimize AI’s impact on the environment.

Maze’s CEO & Co-founder, Jo Widawski, explains that climate-positive changes shouldn’t be made to counteract using AI; they should already be ingrained in the company.

We don’t really talk about it at Maze, but we give 1% of our global revenue to Stripe for Environment—and we have done for years, even before we invested in AI. This is something we do because we believe in it, it’s not a price to pay for using AI.

Jo Widawski

CEO & Co-founder at Maze

If you’re just starting out and looking to implement everyday practices that guide your team’s AI usage, try these:

- Audit the environmental footprint of your AI tools and opt for tools that offer transparency around sustainable practices

- Optimize AI prompts for research to reduce word count and unnecessary computational load

- Prioritize energy efficiency in other areas: explore renewable energy sources and carbon offsetting strategies as part of research budgets

- Advocate for transparent reporting on AI energy consumption—both internally and by holding other businesses accountable

- Use ‘right-sized’ AI models appropriate to the task’s requirements, rather than defaulting to larger models

- Build out a decision tree framework to decide when AI’s value justifies its impact, and when humans should be the default

- Consider simple ways to go green outside of work: reducing meat and dairy intake, donating to charitable causes, plastic-free swaps, planting trees, litter picking etc.
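A decision tree for when AI's value justifies its impact might start as a few explicit rules. The Python sketch below is one hypothetical version: the fields, the branch order, and the `volume_threshold` of 50 are illustrative assumptions that each team would replace with its own criteria.

```python
from dataclasses import dataclass


@dataclass
class ResearchTask:
    sensitive_topic: bool    # e.g. health, finances, or vulnerable participants
    needs_empathy: bool      # rapport or nonverbal cues matter to the findings
    volume: int              # number of responses or sessions to process
    complex_reasoning: bool  # whether a larger model is genuinely needed


def choose_approach(task: ResearchTask, volume_threshold: int = 50) -> str:
    """Illustrative decision tree; every branch and threshold is an assumption to adapt."""
    if task.sensitive_topic or task.needs_empathy:
        return "human-led"
    if task.volume < volume_threshold:
        # At small volumes, AI's speed gain may not justify its footprint
        return "human-led"
    if task.complex_reasoning:
        return "AI-assisted (larger model) + human review"
    # Default to a right-sized, smaller model for routine work
    return "AI-assisted (smaller model) + human review"


print(choose_approach(ResearchTask(False, False, 400, False)))
# AI-assisted (smaller model) + human review
```

Writing the rules down, even this crudely, makes the trade-off reviewable: anyone can see why a study was routed to AI or to humans, and challenge the threshold.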

5 Guardrails for responsible AI research, according to industry experts

You know the risks, you know the best practices, but what are other UX professionals doing? As part of our research for building our AI moderator, we spoke to a number of product teams and industry experts, from AI enthusiasts to cynics alike.

Our research revealed a number of consistent guardrails teams have put into place to support ethical AI usage that scales research and protects against misinformation.

AI will have a profound impact on the industry. It's the wild west. There are no clear ethical guidelines on how to use AI or when it's acceptable to use it.

Geordie Graham

Senior Manager, User Research at Simplii Financial

1. Supplement, don’t substitute

A common theme was emphasis on AI as a complementary resource, rather than a replacement.

As Jo explains: “The thing that makes research powerful is empathy. If you’re only doing AI-powered studies, it’s easy for the voice of the human to be flattened and lose nonverbal signals that are a defining part of the human experience within a product.”

Similarly, Matthieu Dixte, Senior Product Researcher at Maze, recommends combining AI-driven research with human-led research: for example, using AI to scale quantitative analysis and pattern recognition, while deploying human researchers where empathy matters most.

A methodological monoculture severely limits your understanding. The real question is how to thoughtfully integrate AI to create approaches that leverage the unique strengths of both AI and human researchers.

Matthieu Dixte

Senior Product Researcher at Maze

Treat AI as an iteration tool, process support, or initial starting point, but don’t leave it to do everything unguided. Likewise, if you’re exploring synthetic users, use them to complement the findings from human research participants.

2. Always keep a human in the loop

Another guardrail product teams are relying on is implementing human review processes.

While AI is exceptional at scale and speed, it can return false information or hallucinate. Having a human in the loop is vital to ensure AI findings are accurate and ethical.

The most important thing is to remain a critical reviewer of AI’s outputs. If you turn off your critical thinking, then you are no longer the driver of your research and it might not meet your needs. As long as you remain critical, you can’t over-rely on AI.

Peter Kildegaard

Senior Product Manager at Maze

This ranges from manually fact-checking and verifying each AI output, to incorporating random checks in ongoing AI-powered workflows. Ultimately, it’s up to your team to determine when and how you approach quality assurance.
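For the random-check end of that range, a small sampling helper is enough to route a fixed share of AI outputs to a human reviewer. A minimal Python sketch using the standard library's `random` module; the 5% rate and the `always_check` set of must-review items are assumptions for illustration.

```python
import random


def sample_for_review(output_ids, rate=0.1, always_check=(), seed=None):
    """Pick a random share of AI outputs for manual spot-checking.

    output_ids: identifiers of AI outputs (summaries, tags, follow-up questions)
    rate: fraction to review, between 0 and 1 (10% is an arbitrary example)
    always_check: outputs that must always be reviewed, e.g. sensitive topics
    seed: fix for reproducible samples, e.g. in an audit
    """
    if not 0 <= rate <= 1:
        raise ValueError("rate must be between 0 and 1")
    ids = list(output_ids)
    # Review at least one output whenever spot-checking is switched on
    k = max(1, round(len(ids) * rate)) if ids and rate > 0 else 0
    sampled = set(random.Random(seed).sample(ids, k))
    return sampled | set(always_check)


outputs = [f"summary-{n}" for n in range(200)]
to_review = sample_for_review(outputs, rate=0.05, always_check={"summary-17"}, seed=7)
print(len(to_review))  # 10 or 11, depending on whether summary-17 was also sampled
```

Passing a `seed` makes the sample reproducible, which helps if you later need to show which outputs were checked.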

3. Check data privacy, then check it again

One question product teams must answer is whether and how datasets are passed on to train AI models. This is currently a murky area for participant consent, and many product teams are firmly against user data being used to train LLMs.

Peter suggests many best practices for processing data don’t differ when it comes to AI usage:

“As long as the data is not being used for training the models, the same principles exist as with regular use of software for research purposes. You need to make sure you own the data, and that the data is not being used for unwanted purposes. Consent and clear communication are vital.”

When it comes to the perception of research participants, some may be put off by knowing AI is processing their data. While this attitude will likely evolve over time, it’s important to meet users where they’re at.

Pick and choose how you use AI, when you use it, and what it has access to. Then communicate all of that with your participants.

I think best practices will have to evolve in the same way we've developed best practices in participant management, ensuring we don't use AI in an unethical way or share misleading data.

Geordie Graham

Senior Manager, User Research at Simplii Financial

4. Help AI succeed: spend time training AI and prompt engineering

AI is only as good as the technology it’s built on—and the prompts you feed it.

Matthieu, who’s been instrumental in implementing behind-the-scenes guardrails for Maze’s AI features, explains his approach:

“I consider AI like a junior assistant researcher. It might lack critical thinking due to inexperience in the role, in the org, or to avoid offending me.”

AI’s effectiveness depends on quality background information, properly-structured data, clear instructions, and examples of the artifacts you want created.

Matthieu Dixte

Senior Product Researcher at Maze

Like Matthieu, many product teams are dedicating time to improving the AI products they work with. If you’ve created your own GPT or have your own account with Claude, ChatGPT, or Gemini, you can help the AI succeed by providing feedback and engineering clear, explicit prompts.

💡 Need a hand with AI prompts?

Here are our top AI prompts for user research, and how to write your own for effective research every time.

5. Lead with trust

One primary guardrail researchers have embedded into their practice is trust.

Trust can mean many things, but when it comes to AI it broadly falls into three categories:

- Trust with your stakeholders

- Trust in your tools

- Trust in your own instincts

Being responsible and building trust means seeing AI in service of the human, and not AI as a replacement of the human. That’s always the philosophy we’ve applied at Maze.

Jo Widawski

CEO & Co-founder at Maze

As Jo says, “research is a practice that has to constantly justify its own existence. If you're putting yourself in the shoes of a researcher who has been running studies for 10 years, they’ve spent so long building trust with their executives.”

Introducing AI tools can feel like handing over that hard-earned credibility to an unvetted counterpart, so it’s vital to align your team, and empower them to balance AI with their own judgement.

One thing you can do is opt for AI tools that equally prioritize trust.

Peter explains: “This is where traceability becomes very important. Your findings should have a direct connection to the source data, so you can easily review the relevancy and correctness of the AI’s insights.”

Ultimately, we care about the human-in-the-loop. It’s about the quality of insights and trust, more than just doing everything as quickly as possible.

Jo Widawski

CEO & Co-founder at Maze

Work to cultivate that trust—within yourself, your tool, your team—then build out from that foundation and integrate ethical AI best practices to maintain stakeholder confidence and organizational trust.

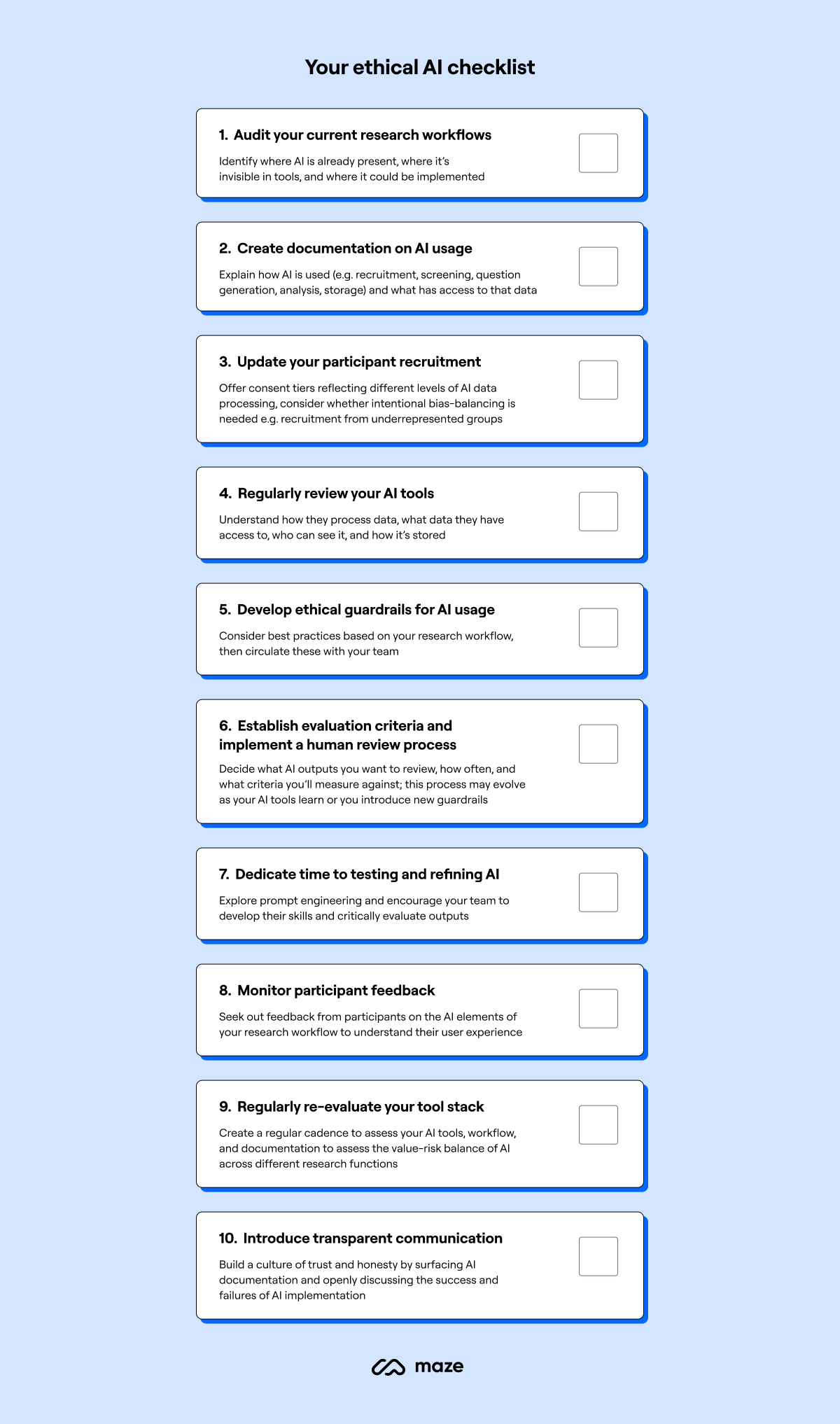

Ethical AI: A checklist for UX research

The best practices and guardrails right for your team and research practice need to come from you, but that doesn’t mean we can’t help guide you to make those calls.

Ethical challenges research teams should prepare for

AI advancements bring exciting possibilities in user research, but they also muddy the ethical and legal responsibilities that researchers face.

As AI tools become more sophisticated and widely adopted, it’s not just trust within your organization or participant boundaries that needs consideration: teams must prepare for more complex, global ethical AI challenges.

Global AI regulations and laws

Current AI regulations are a compliance minefield for researchers, differing hugely between countries.

As strict regulation and enforcement on AI increases, research teams should stay on top of the latest governance.

Here’s the status quo:

- The first-ever legal framework for AI, Europe’s AI Act, came into effect across the European Union in August 2024: The act broadly groups AI use cases by risk, with high-risk instances (e.g. biometric surveillance) requiring registration.

- In the US, while there are no comprehensive federal laws regulating AI, 45 states introduced AI bills in 2024: These range from committees monitoring AI developments, to regulating deepfake technology and criminalizing AI-generated explicit content.

- China has a number of sector-specific regulations dating back to 2017: Most recently in September 2024, the AI Safety Governance Framework launched ethical standards for AI use, while China’s Cyberspace Administration implemented national standards to identify AI-generated content. A proposed Artificial Intelligence Law of the People’s Republic of China could also impose legal restrictions on AI developers, especially for high-risk AI systems.

- Japan’s government introduced AI guidance in 2024: the AI Guidelines for Business and the proposed Basic Law for the Promotion of Responsible AI both lay out ethical guidance across data security, transparency, privacy and innovation.

- Over 60 countries in the rest of the world have proposed AI-related policies and legal frameworks to address AI safety, including India, Australia, and Saudi Arabia.

Inevitable AI audits

Like any technology dealing with sensitive information, audits will likely arise for many forms of AI—be it on a company, industry, or national level.

Over time, researchers can expect to see best practices, like those outlined above, becoming mandatory through reviews that check AI systems for functionality, transparency, ethics, and legal compliance.

To get ahead, product teams should future-proof AI usage by establishing clear documentation, audit trails, and robust internal processes for any work relating to AI.
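An audit trail can start as an append-only log of every AI action: which feature ran, which model, on what data, and who reviewed it. The Python sketch below keeps the log in memory and chains each entry to the hash of the previous one using `hashlib.sha256`, so a silent edit to an earlier record is detectable. The field names are hypothetical, and a real system would persist entries to durable storage.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(record: dict) -> str:
    # Stable serialization so the same record always hashes the same way
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


def append_audit_entry(log: list, **details) -> dict:
    """Append one AI action to a hash-chained audit log (kept in memory here)."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else None,
        **details,
    }
    entry["hash"] = _digest(entry)
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Return False if any earlier entry was edited, removed, or reordered."""
    prev = None
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


log = []
append_audit_entry(log, study_id="study-12", ai_feature="thematic_analysis",
                   model="model-a", data_scope="transcripts", reviewed_by="researcher-a")
append_audit_entry(log, study_id="study-12", ai_feature="follow_up_questions",
                   model="model-b", data_scope="survey_responses", reviewed_by=None)
print(verify_chain(log))  # True
log[0]["model"] = "model-c"  # simulate a silent edit
print(verify_chain(log))  # False
```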

Rise of synthetic users

The emergence of synthetic users poses not just an ethical question, but a philosophical one.

While AI-generated personas offer value for large-scale research or early ‘scrappy’ testing, they also run counter to the fundamentally human-centered nature of user research.

The majority of researchers see the potential in synthetic users, but endorse them as an addition to human users, rather than a substitute.

Synthetic users can be valuable thought-starters and can help expand our thinking, but they should complement—never replace—research with actual humans. The unique contexts, unexpected behaviors, and nonverbal cues that define real user experiences simply cannot be synthesized.

Matthieu Dixte

Senior Product Researcher at Maze

To prepare, teams should consider whether they would be comfortable using synthetic testers, how to validate AI-driven insights against authentic user behavior, and how to maintain that all-important human connection.

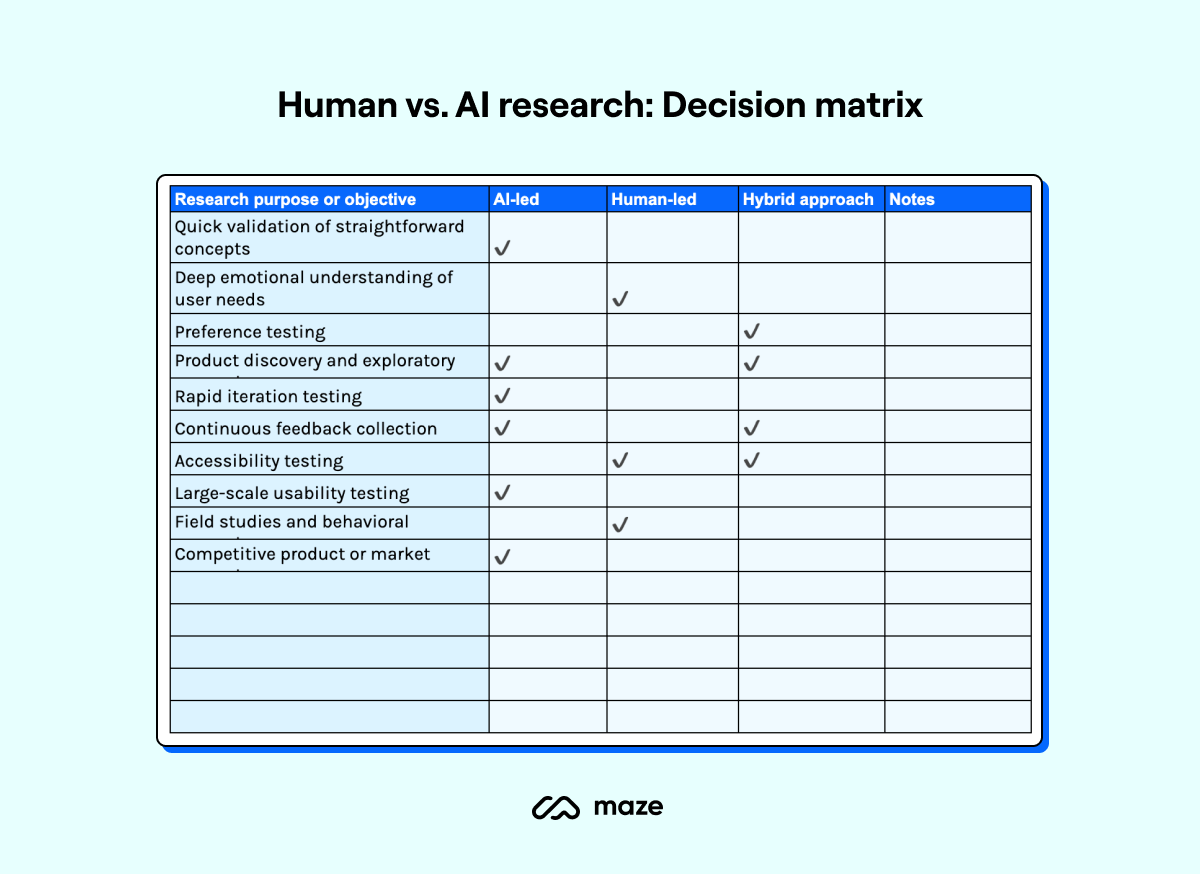

💡 Not sure when to use synthetic users?

Check out our decision framework for when to use AI vs. humans for user research.

Maze’s principles for AI user research: Trust is a non-negotiable

We can’t wrap up this deep dive into the ethics of AI in user research without turning the mirror inwards—so let’s take a look at Maze’s approach to AI.

You’re in control: Whether it’s AI Follow-Up questions or an AI-moderated interview, each functionality allows you to set the rules, preview the conversation and build with your preferred practices.

Everything is trackable: Each insight Maze AI generates is traceable, allowing you to dive into transcripts, AI-generated takeaways or highlights, and follow the AI’s decision path to get there.

All of this seems simple in practice, but it’s the underlying philosophy that shows up in the features. Trust, and always being able to trace back to why decisions were made.

Jo Widawski

CEO & Co-founder at Maze

Your data is never used to train AI models: The third-party providers Maze uses for AI will never use your study data (survey responses, test results, conversations, recordings) to train their models.

Your data is deleted after 30 days: Any data sent through third-party APIs is retained for a maximum of 30 days, then deleted permanently.

You can always opt out: Maze allows you to opt out of specific AI features for yourself or your team, so you can control what you’re comfortable with.

We’re always evaluating: With strict human and AI-led review processes, Maze is always reviewing the quality, speed, and effectiveness of AI features.

Guardrails are built-in: Maze AI is meant to take the pressure off, not add to it—so rather than bombard you with instructions and tool tips, all the guardrails for quality and consistency are built into the product.

We’ve put in guardrails for AI to be as ethically responsible as possible. If AI performance is the top-level metric, then ethical responsibilities should be part of that evaluation process. That’s where we have very deliberate instructions we’re giving the AI, and we’re constantly evaluating the AI’s performance.

Peter Kildegaard

Senior Product Manager at Maze

We cross-check between models: Maze uses OpenAI and Anthropic (Claude) models to power AI features, and cross-evaluates the outputs of both, meaning our reviews are never biased toward one AI model.

Transparency is integral: We’ll never hide how or where AI is used—you can read all the nitty-gritty details right here.

We’re prioritizing value over hype: There’s a reason we waited before launching an AI moderator—getting it right was our priority, rather than shipping fast at the expense of your trust.

Maze AI can’t do everything—yet. But that’s on purpose. It came back to our philosophy of trust. We believe we need to build trust with our customers and users first, so we’ll do that by nailing a core use case, then once we’ve earned the trust, we’ll look to the next opportunity.

Peter Kildegaard

Senior Product Manager at Maze

So, is it ethical to use AI in UX research?

AI might be the new co-pilot for your research practice, but that doesn’t mean commitments to ethical and inclusive user research are changing.

With the right boundaries and best practices in place, research teams can harness AI’s efficiency while maintaining the integrity and empathy that sit at the heart of user research.

- Treat AI as a supplement, not a substitute, for human expertise

- Implement robust guardrails including human reviews and informed consent

- Lead with trust and transparency—and choose tools that do the same

AI can become a really important tool in increasing and augmenting human capacity. If we push for ethical behavior and appropriate safeguards, it'll be like the switch from using paper to using computers.

Geordie Graham

Senior Manager, User Research at Simplii Financial

Above all, focus on building ethical foundations that center your users. Speed means nothing without trust, and insights lose all value if they compromise the very people they're meant to serve.

Explore AI-powered research with built-in safeguards

Discover how Maze's AI features prioritize trust, transparency, and traceability while keeping you in control of your research process.

Frequently asked questions about AI ethics in user research

What are the 5 ethics of AI?

Five commonly cited principles of AI ethics are: Transparency (explainable AI decisions), Fairness (unbiased and equitable outcomes), Accountability (clear responsibility for AI actions), Privacy (protecting personal data and consent), and Beneficence (ensuring AI serves human welfare). These principles form the foundation for responsible AI development and deployment across all applications.

Will AI replace user researchers?

No, AI will not replace user researchers. While AI excels at data processing and pattern recognition, human researchers remain essential for empathy, critical thinking, stakeholder communication, and contextual understanding. AI serves as a powerful tool to augment researcher capabilities, handling routine tasks so humans can focus on strategic insights, relationship building, and nuanced interpretation of user needs.

How can organizations ensure transparency and explainability in AI-driven UX research?

Organizations should choose AI tools that provide traceable decision paths, maintain clear documentation of AI usage throughout research processes, establish human review protocols for AI-generated insights, communicate AI involvement to participants and stakeholders, and implement audit trails showing how AI reached specific conclusions. Regular evaluation of AI performance and bias detection also ensures transparent, accountable research practices.

How can AI bias impact user research?

AI bias can skew research insights by amplifying societal prejudices, homogenizing diverse user perspectives, and reinforcing existing assumptions.

To mitigate AI's bias, you can: use diverse, representative training data, implement bias detection algorithms, recruit inclusive participant samples, establish human review processes, test AI outputs against known biases, and supplement AI insights with human-led research targeting underrepresented groups.

How can AI impact privacy and consent in user research?

AI complicates privacy through increased data processing capabilities, potential secondary use of personal information, difficulty in data deletion, and opaque decision-making processes.

You can protect research participant privacy by: updating consent forms to explicitly mention AI usage, offering tiered consent options, ensuring data isn't used for AI training without permission, implementing strong data security measures, and providing clear information about data storage, sharing, and retention policies.