TL;DR

UserTesting customers often complain about hidden costs, testing difficulties and bugs, and the quality of research participants. If you’re an existing user who agrees, or a prospective user who’s worried about running into these issues, we’ve listed the top UserTesting alternatives for you to consider.

Maze is a great alternative that solves these issues. Alongside Maze, other UserTesting alternatives include Lookback, Optimal Workshop, Lyssna, Loop11, Userfeel, dscout, Userlytics, Sprig, Trymata, Userbrain, Hotjar, and PlaybookUX.

We review these tools’ key functionalities, pros, cons, user reviews, and pricing to help you make an informed decision for your team’s UX research operations.

User research is an essential step in the product development process, and there are many UX research tools to help you along the way. While UserTesting is one option, it’s by no means the only tool for getting UX insights.

Every business has different needs—let’s find a solution that fits yours.

Why look for an alternative to UserTesting?

UserTesting has long been a familiar name in UX research, but teams often complain about:

- High and hidden costs

- Testing difficulties and bugs

- Inconsistent participant quality

These issues make it harder to get fast, reliable insights that match the speed of modern product development.

1. High and hidden costs

UserTesting’s pricing is a frequent reason teams look for alternatives. Because UserTesting charges per seat, costs add up quickly as more people need access. For many teams, this makes it an unsustainable user research solution, since it limits how far research operations can scale and how widely research can be democratized.

“The biggest downside is the cost, as the per-subject costs seem reasonable, but the per-seat licenses do not seem reasonable.” – Corey S., Enterprise Founder

Users also report additional costs as their research operations and needs mature. This is due to how features are packaged across plans:

- Advanced includes fundamentals like moderated sessions, unmoderated tests, demographic filters, transcripts, sentiment analysis, and core integrations

- Ultimate adds AI-powered analysis, card sorting and tree testing, smart tags, Insights Hub, secure prototype hosting, approval flows, and advanced audience management

- Ultimate+ introduces team-wide unlimited testing, premium services, custom sourcing, and dedicated consultants

Moving from Advanced to Ultimate to Ultimate+ incurs costs that teams aren’t always prepared for—leaving them with a difficult decision: increase spending or limit research capabilities. Plus, users note that taking this step can be a difficult and time-consuming process.

“It's so hard to get a license! Negotiation takes forever, especially now with their new 'session units' policy that makes me feel like I'm buying micropayments on a freemium app. If I had an alternative, I'd switch just because they're so hard to do business with.” – Verified Enterprise User

2. Testing difficulties and bugs

Alongside cost, users also find that UserTesting’s performance leaves a lot to be desired. While it offers a variety of research methods (though not every method), users observe that the company often prioritizes quantity over quality.

“It seems that they continue to just add more new products to their roadmap before fine tuning the existing products. For example, in the unmoderated features, there is no way to randomly present more than 2 different prototypes. You can only counter balance with two.” – Verified Enterprise User in Financial Services

Reviewers also mention that UserTesting’s own user experience isn’t ideal, with one user calling it a “powerful tool that would benefit from its own purpose." The user, a Senior UX Designer, goes on to explain that “it can be difficult to know where to find tools, there are inconsistencies in test organization/folder structure, and some features are just buried behind a 'clean' UI.”

Beyond the researcher’s experience, users also report issues on the participant side, such as “different bugs (that) occur during the recordings.” Not ideal when you need insights fast.

3. Inconsistent participant quality

While UserTesting offers a large tester pool, multiple reviewers say the quality isn’t consistent. Some participants rush through studies, give shallow feedback, or aren’t genuine profiles.

“One downside is the quality of participants at times. There are professional test takers and bots trying to simply make money.” — Lucas L., Enterprise User

Poor-quality feedback wastes both time and paid credits, especially when every unusable session adds to overall project costs. That being said, users do report that “UT has great functionality built-in to alleviate these situations to replace a tester at no cost or fix the issue.” However, that doesn’t make up for the lost time and effort required to get in touch and restart studies.

Now that we know why so many UserTesting customers consider switching, let’s look at the best alternatives that can help you run research faster, automate analysis, and scale insights across your whole product team.

13 best UserTesting alternatives

Here’s a quick overview of the best UserTesting alternatives:

UserTesting alternatives | Best for | Pricing |

|---|---|---|

Maze | Teams that want an all-in-one research platform combining prototype testing, usability studies, and AI-powered analysis to move from idea to insight fast |

|

Lookback | Teams focused on qualitative, video-based studies |

|

Optimal Workshop | Researchers improving information architecture and navigation |

|

Lyssna (formerly UsabilityHub) | Smaller teams that need quick, structured usability tests and early feedback |

|

Loop11 | Teams running task-based usability testing across web and mobile, without needing advanced logic or IA tools |

|

Userfeel | Small teams or freelancers who need quick, affordable user feedback without long-term commitments |

|

Dscout | Enterprises and large teams that want to run remote qualitative testing |

|

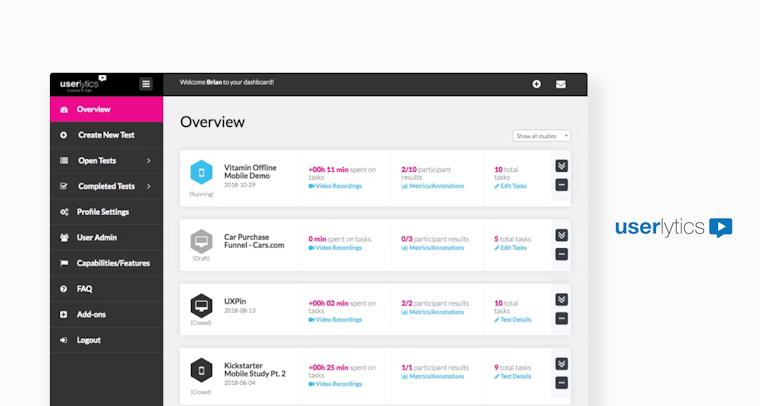

Userlytics | Large or growing teams running continuous, mixed-method UX research across global audiences |

|

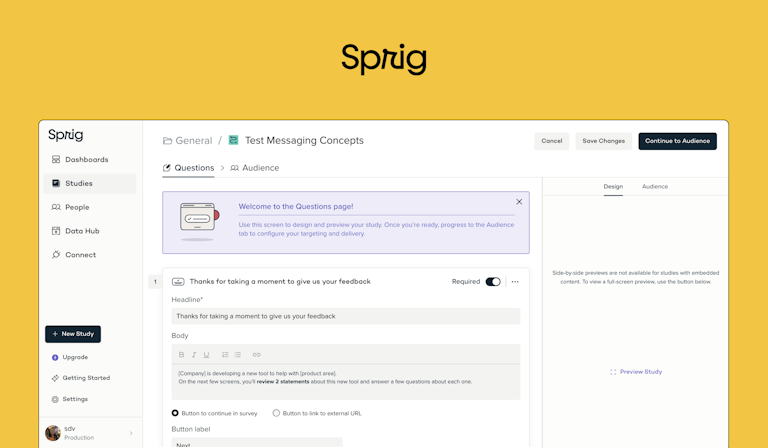

Sprig | Product and growth teams that need in-product, AI-powered feedback loops |

|

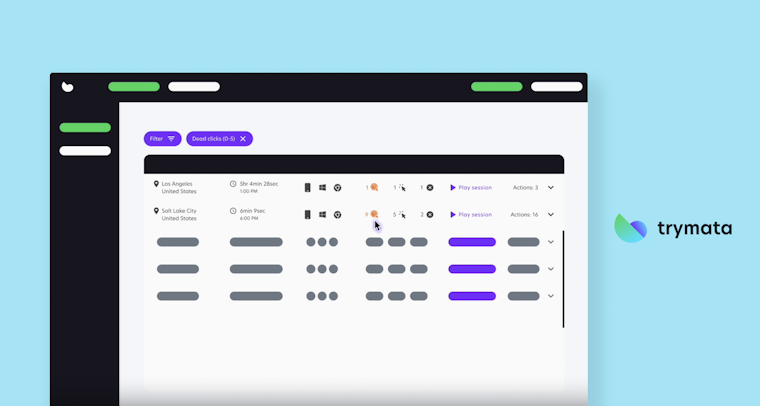

Trymata (formerly TryMyUI) | Scaling UX research across larger teams or agencies |

|

Userbrain | Teams focused exclusively on unmoderated usability testing |

|

Hotjar | Small to mid-sized product and UX teams that need only unmoderated insights into real user behavior |

|

PlaybookUX | Mid-sized teams running remote UX studies and have the budget for advanced features. |

|

1. Maze: Best research platform for comprehensive, AI-supported user research

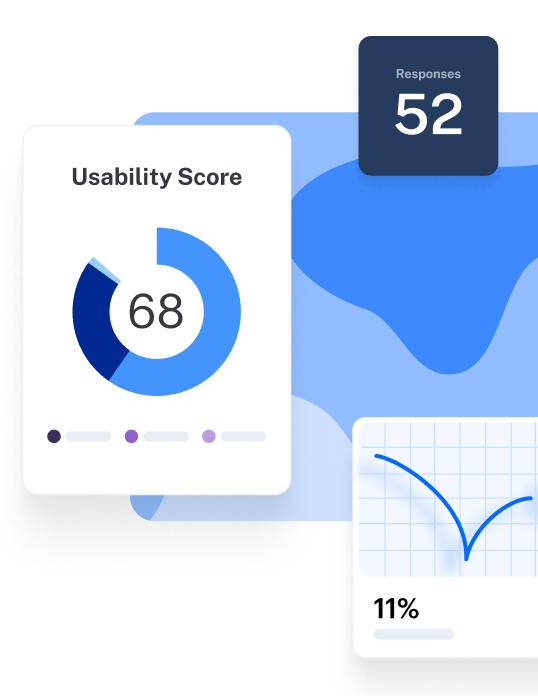

Maze is the leading user research platform helping UX researchers, UX designers, and product teams uncover user insights at the speed of product development. It offers qualitative and quantitative research methods, and supports both moderated and unmoderated testing.

With Maze AI, researchers can automate key parts of the research and testing process, like summarizing qualitative feedback, identifying themes, and generating follow-up questions. Maze’s AI moderator can automatically build discussion guides, conduct real-time interviews across time zones, and deliver structured insights you can trace, edit, and trust.

Alongside the extensive suite of user research methods, Maze is also intuitive to use, offers 50+ pre-built testing templates, and integrates with your team’s favorite tools like Figma, Notion, Slack, and more. Plus, you can test next-gen AI prototypes built with tools like Figma Make, Lovable, and Bolt, capturing how real users interact with AI-generated designs before you invest in development.

Pros

- Offers comprehensive research methods in one platform, including prototype testing (remote usability testing complete with video Clips), card sorting and tree testing (improve IA), interview studies (live interviews with AI-powered transcription and summaries), live website testing, mobile usability testing (website and app testing), and surveys (fast feedback with minimal lift)

- Supports real-world scale with a global participant panel, Maze Panel, of 3M+ high-quality participants, 400+ targeting filters, and Reach for CRM-style participant management

- Powerful AI capabilities including the Maze AI suite for automated reporting and synthesis, Maze’s AI moderator for scalable 24/7 interviews, and AI-assisted question design to reduce bias and improve research quality

- Seamless integrations with design and product workflows, including Axure and Figma, and AI-prototyping tools such as Figma Make, Bolt, and Lovable

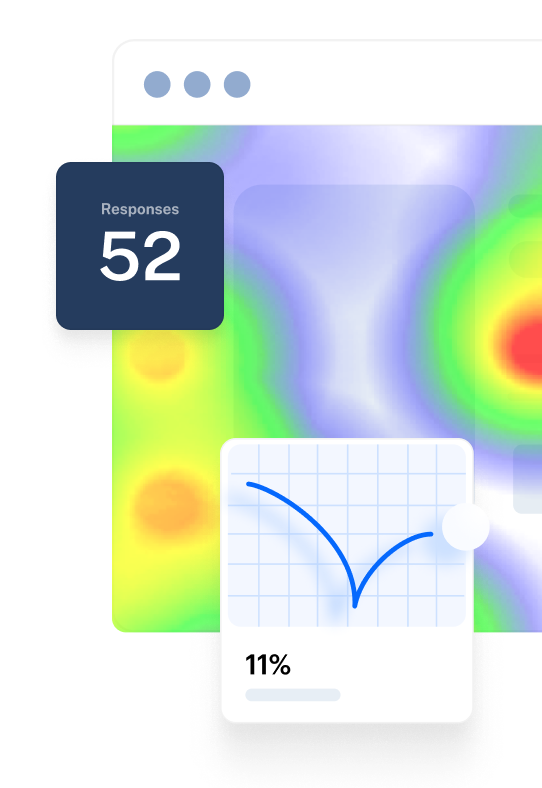

- Delivers faster insights with automated reports that include usability metrics like task-level success, misclicks, path analysis, heatmaps, and insight summaries

Cons

- No built-in video interview hosting (but integrates with Zoom, Google Meet, and Microsoft Teams)

What do Maze user reviews say?

Maze customers highlight the platform’s speed, collaboration, and cost efficiency as the biggest advantages.

Maria C., a Product Designer, shares: “Maze is easy to use, with a variety of testing types. I love the Figma connection and the AI follow-up questions, it helps organize answers clearly.” Some reviewers also point to areas that could improve. Marledvuka L., a Field Operations Manager, notes limitations on the free plan: “Maze helps us validate ideas quickly, but the free plan lacks the intensive testing capabilities we sometimes need.”

Pricing

Maze offers pricing options for organizations and product teams of all sizes:

| Free | Organization |
|---|---|
| $0/month | Custom pricing |
| - 1 study per month - 5 seats - Essential prototype testing - Surveys - Pay-per-use panel credits | - Custom study quantities - Unlimited seats - Access to 3M+ global panel (or bring your own for free) - Moderated interviews - AI-moderated interviews - Prototype testing - Surveys - Card sorting & tree testing - Mobile experience testing - Audio, video & screen recording - All Maze AI features - Automated analysis & presentation-ready reports - Enterprise security & controls - Priority support, dedicated CSM & research partner access |

Comparing Maze with UserTesting

The verdict: Choose Maze if you want both moderated and unmoderated testing and reporting capabilities. Maze is also a user-friendly alternative if you want to enable more people from your team to conduct continuous research at any time in the development lifecycle.

When comparing UserTesting to Maze, there are some key differences to consider between the two tools:

- Maze is more affordable, with free and custom plans compared to UserTesting’s typical contracts, which average $40,000 per year

- Maze integrates with Figma and Axure and supports AI prototyping with Bolt, Figma Make, Lovable, and Replit. UserTesting only connects to Canva, Figma, FigJam, and Miro

- Maze automatically generates reports and delivers deeper insights, bringing the most relevant test insights into one place, ready to download and share with key stakeholders in real time

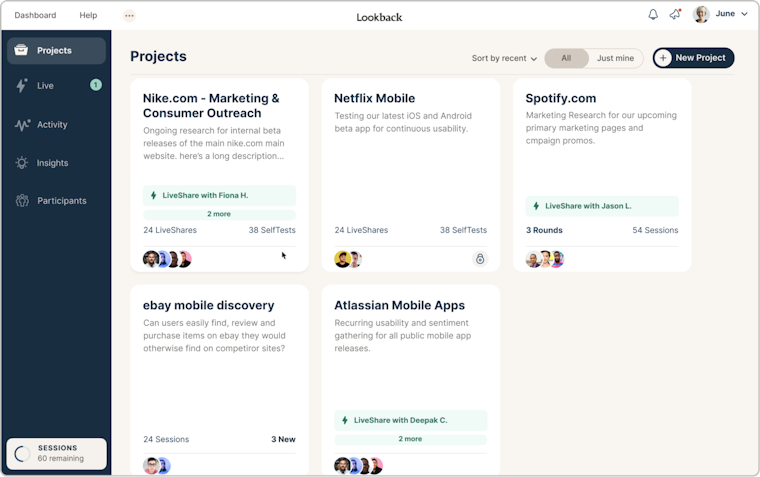

2. Lookback: Best for qualitative-only research

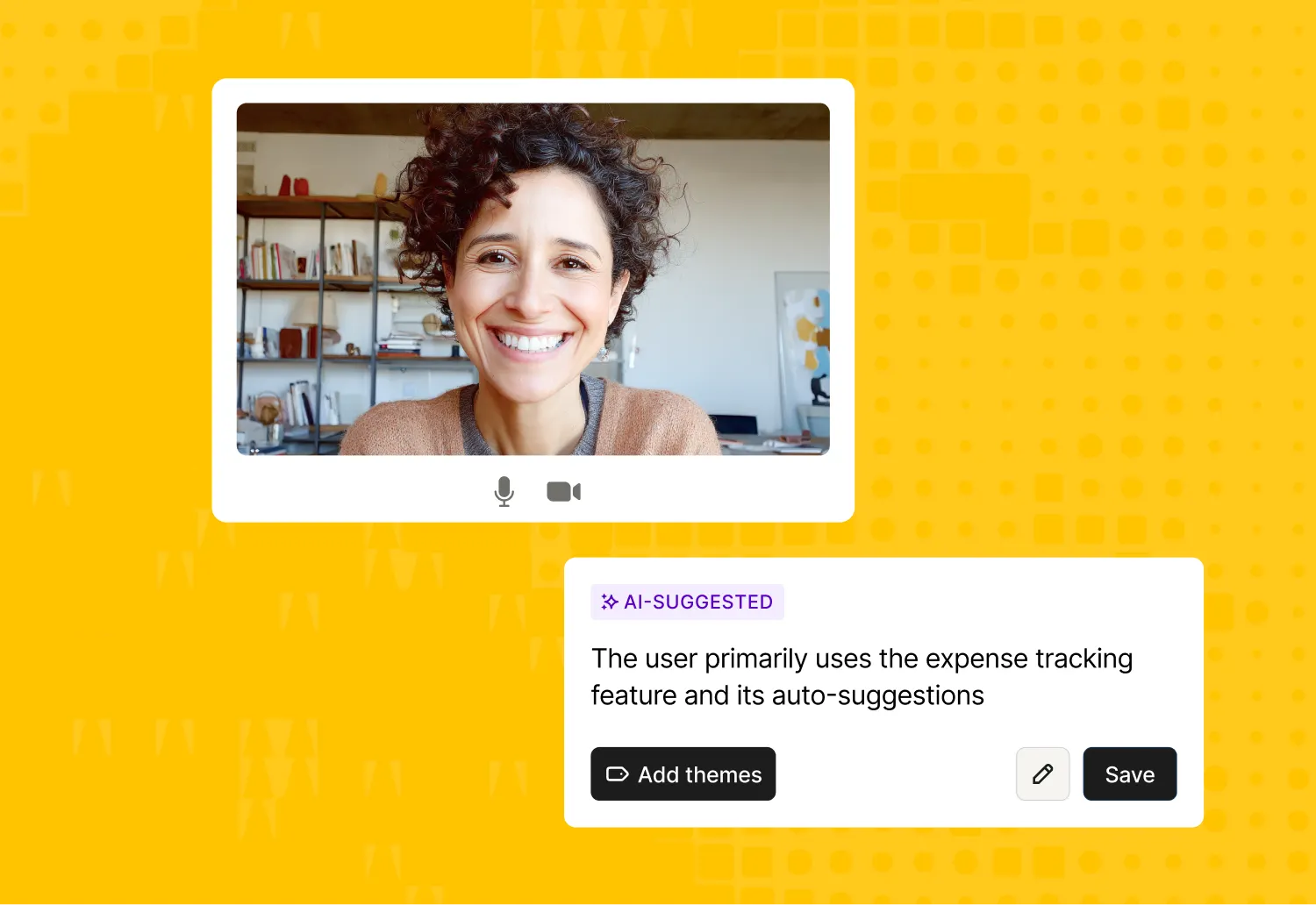

Lookback is a user research platform that places a stronger emphasis on qualitative research methods and in-depth user interviews. Teams can host moderated or unmoderated sessions, collaborate through a live 'observer lobby,' and take time-stamped notes while watching participants interact with prototypes or products. Its Eureka AI features speed up analysis with automatic transcripts, highlights, and summarized insights.

Pros

- Integrates with Respondent for participant management

- Supports both moderated and unmoderated user sessions

- Provides AI-assisted transcription and auto-notes via Eureka

- Enables live observation and collaboration through an observer lobby

- Stores and organizes recordings, notes, and highlight reels for easy sharing

Cons

- Does not support quantitative testing methods such as time-on-task measurement, SUS scoring, first-click tests, or structured survey questions

- Most reviews across sites like G2 date back to 2022 or earlier, suggesting slower development and potential stagnation in recent years

- Users report plugin errors, connectivity drops, and setup confusion, especially for less tech-savvy participants

What do Lookback user reviews say?

While Lookback has been appreciated for its user research features, it's important to note that the most recent reviews available are from 2022, and therefore could be considered outdated.

Julian, a UX Consultant in the UK, says, “It’s good for moderated usability testing, allowing full screen (and face) recording of users as they explore sites. The tools for taking notes are good, with timestamps and allowing additional viewers from our team to make notes as sessions take place.”

Some reviews highlight technical challenges that affect user experience. For example, Demet E. points out issues related to the required plug-in, stating, "Users have a lot of difficulty with the plug-in, which sometimes causes no-shows." This suggests a barrier that could deter participants from successfully joining research sessions. Similarly, Taylor J. says "Some users had trouble getting into a session through the tool... it wasn't easy to troubleshoot."

Pricing

Lookback’s pricing plans are billed annually and come with five free research sessions:

| Freelance | Team | Insights Hub | Enterprise |
|---|---|---|---|
| $299/year | $1,782/year | $4,122/year | From $18,150/year |
| - 10 sessions (moderated or unmoderated) - Add 10-session packs for $299/package ($29.90/session) - 1 panel participant included - Bring your own participants free - Recruit additional participants: $49 each | - 100 moderated sessions - Add 10-session packs for $178/package ($17.80/session) - Unlimited unmoderated sessions - 10 panel participants included - Bring your own participants free - Recruit additional participants: $38 each - 10 collaborators - Unlimited guests - Security Self-Assess - Chat support | - 300 moderated sessions - Add 10-session packs for $137/package ($13.70/session) - Unlimited unmoderated sessions - 30 panel participants included - Bring your own participants free - Recruit additional participants: $31 each - 30 collaborators - Unlimited guests - Security Assessment Assist - Priority chat support | - Unlimited moderated + unmoderated sessions - 200 panel participants included - Bring your own participants free - Recruit additional participants: $25 each - All core features - Custom seat configuration - Security review - Custom legal - SSO - Invoicing/PO - Team training - Audits - CSM support |

Lookback vs. UserTesting

Lookback and UserTesting are similar in terms of testing types. Both focus on remote moderated testing but offer some unmoderated capabilities. However, Lookback is much more affordable for small businesses.

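Unlike UserTesting or Maze, Lookback doesn’t have its own large pool of testers. Each plan includes a small number of panel participants, with additional recruits charged per person; otherwise, you’d need to recruit test participants separately or through an agency.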

They both have good video capabilities, but Lookback’s live and time-stamped comments allow for easier navigation of video user research.

The verdict: Choose Lookback if you need to easily scan your customer interviews. If you need multiple team members to have access to the tool and conduct user research, Lookback might be a more cost-effective solution.

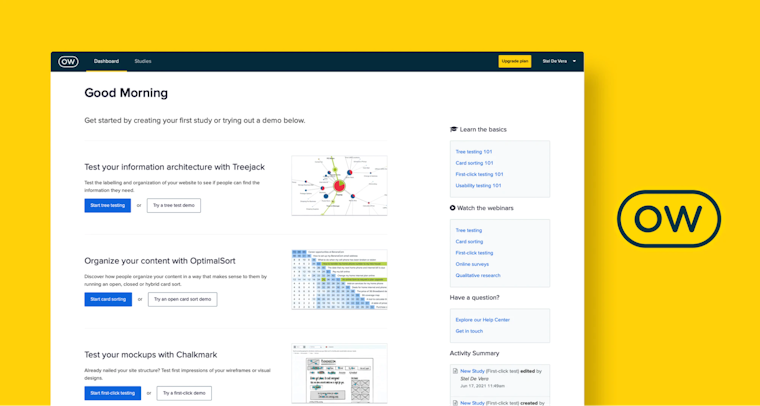

3. Optimal Workshop: Best for assessing information architecture (IA) and early-stage usability

Optimal Workshop is a testing tool for helping research teams design and validate information architecture with card sorting and tree testing. Beyond IA, it also supports first-click testing, surveys, and qualitative analysis to capture user behavior, attitudes, and insights across the discovery process. However, since its main strength is IA and early testing, teams looking for broader research methods like live website testing, advanced prototyping, or deep tool integrations may need to pair it with other UX platforms.

Pros

- Integrated participant recruitment and solid documentation for best practices

- Intuitive interface and easy setup with consistent updates that improve usability

- Data visualization and reporting help teams identify patterns and present results to stakeholders

Cons

- Analytics and data export limitations: users report needing to export raw data for deeper analysis

- Participant quality issues, with reviewers noting some low-effort responses in panel studies

- Performance slowdowns in large or complex studies, especially when exporting big datasets

What do Optimal Workshop users say?

Users often describe Optimal Workshop as easy to use and dependable for card sorting, tree testing, and early usability studies. Ines K., a Lead Product Designer, appreciates the platform’s simplicity, saying, “The survey and card sorting tools are easy to set up, and the platform keeps improving. The only drawback is the analytics. I often need to export data for deeper analysis.”

Pricing

| Starter | Enterprise |
|---|---|
| $199/month (billed annually) | Custom pricing |
| - 5 studies launched per year - Unlimited seats - Unlimited participant responses per study - All tools and study types - AI-powered analytics and insights - SOC II / GDPR compliance | - Custom study bundles - Unlimited seats - Unlimited participant responses per study - All tools and study types - Everything in Starter - Enterprise-grade security - Multiple workspaces and private projects - Administrator controls - Usage reports - Dedicated onboarding and setup - Dedicated Customer Success Manager |

Optimal Workshop vs. UserTesting

The verdict: Pick Optimal Workshop if you’re a small team that’s just starting to incorporate user research and you’re working with a limited budget. If your product team needs more than 15 to 20 seats, or you’re looking for more nuanced prototype feedback, try a solution like Maze to increase the quality of reports and insights.

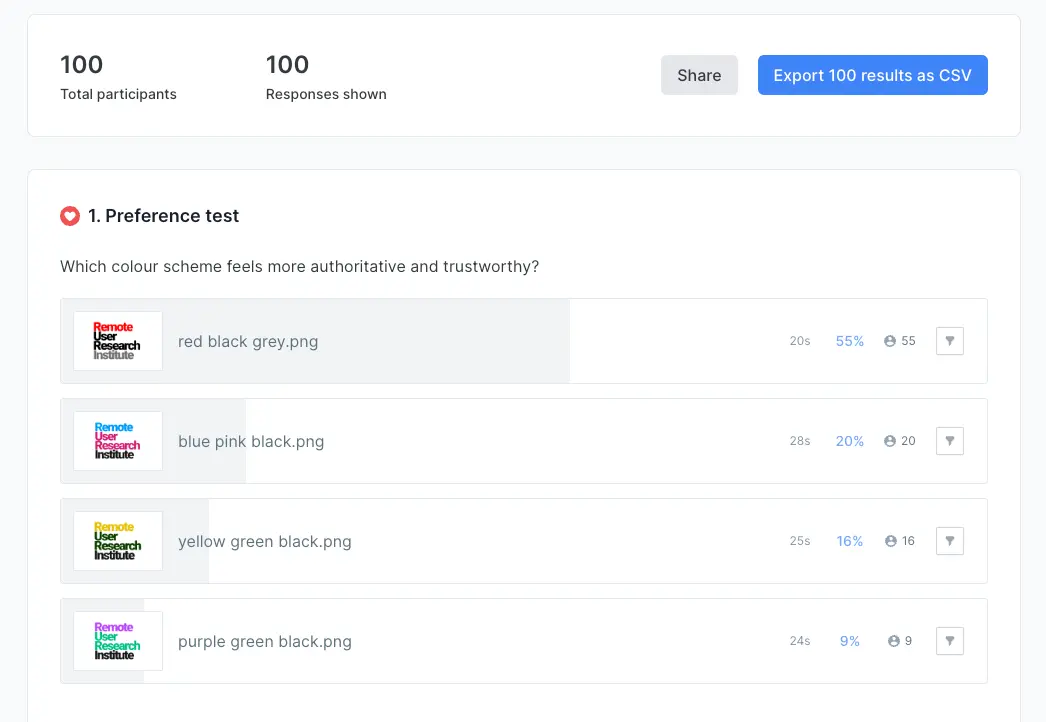

4. Lyssna: Good if you need quick design validation and early concept feedback

Lyssna is a quick, easy-to-use UX research platform built for teams that need to validate designs and concepts fast. It supports moderated and unmoderated tests, including first-click, five-second, preference, prototype, and card sorting studies, all accessible through simple templates.

Pros

- Supports both moderated and unmoderated studies

- Large participant panel with 690,000+ users and 395+ demographic filters for fast recruitment

- Offers a wide range of test types: first-click, five-second, preference, surveys, and prototype tests

Cons

- No autosave; users can lose progress during setup

- Limited AI features: only summaries and follow-up questions

- Minimal customization for screens, transitions, and task logic

- Limited integrations as it only connects with Figma, Zoom, Microsoft Teams, Microsoft Outlook, and Google Calendar

What do Lyssna users say?

Lyssna users highlight its simplicity and speed as major strengths. Amanda D., an Associate Creative Director, described it as “so darn intuitive to use” and appreciated the built-in templates that make it easy to run studies without much setup.

But not everyone finds Lyssna perfect. Aileen M., a UX Research Consultant, said it’s “comparable to more powerful tools, just shy on some functionalities,” pointing out missing logic controls and limited screener flexibility. Similarly, Jamie L., a UX Designer, mentioned frustration over losing work when her browser timed out, wishing for an autosave feature.

Pricing

| Free | Starter | Growth | Enterprise |
|---|---|---|---|
| $0 | $99/month | $199/month | Custom pricing |
| - 1 study per month - 3 seats included - 15 self-recruited responses - Core methodologies - Interviews - Live chat support - GDPR compliant - Section groups & randomization - Spaces | - 1 study per month - 5 seats included - Unlimited self-recruited responses - Everything in Free - AI follow-up questions - CSV exports - Custom welcome & thank-you messages - Interview co-hosts - Live website testing - Recordings - Variation sets - Conditional logic | - 3 studies per month - 15 seats included - Unlimited self-recruited responses - Everything in Starter - AI-generated summaries - Custom variables - Custom messages - Recruitment limits - Role-based permissions - Test redirects | - Custom study limits - Unlimited seats - Unlimited self-recruited responses - Everything in Growth - Agreements - Closed Spaces - Custom branding - Custom contract terms - Password rules - Priority support - Security audit assistance - SOC2 report - Single sign-on (SSO) - Wallets |

Lyssna vs. UserTesting

The verdict: Lyssna is much more affordable than UserTesting. Both support moderated and unmoderated research, but Lyssna focuses on quick, template-based tests and faster feedback. It’s the better choice for lean teams needing affordable, fast validation, but consider other alternatives on our list for running in-depth, video-led studies.

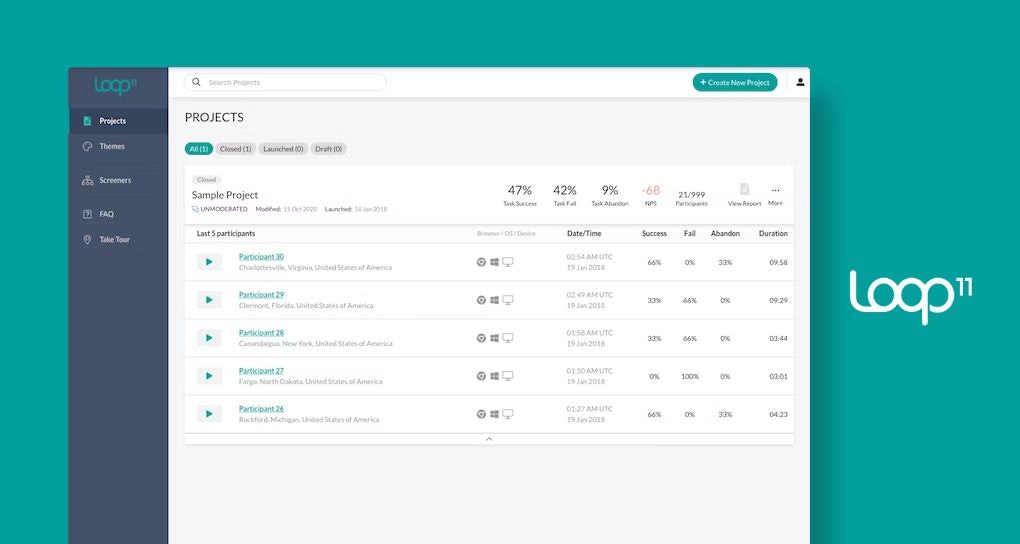

5. Loop11: Works best for simple usability studies, not end-to-end research

Loop11 supports both moderated and unmoderated studies, capturing screen, audio, and video recordings so teams can analyze user behavior in context. You can run tests across desktop, web, iOS, and Android. You can also bring your own participants or recruit through Loop11’s panel, making it flexible for internal and external testing. However, Loop11 focuses mainly on task-based testing, meaning it doesn’t include IA methods like card sorting or tree testing, and its survey logic is more limited than that of all-in-one UX suites like Maze.

Pros

- Easy to set up and use for quick website and prototype testing

- Supports both moderated and unmoderated studies across web and mobile

- Useful screen recording and analytics tools like heatmaps and navigation paths

Cons

- No card sorting, tree testing, or advanced survey logic

- Lacks accessibility and responsiveness testing features

- Limited customization and API capabilities for regression testing

What do Loop11 users say?

Many reviewers see Loop11 as a simple tool for quick usability testing. Akhil H., a small-business user, praised its simplicity, saying “Loop11 is very easy to use with its in-built AI project creator, which sets up detailed tasks and activities in seconds.”

But not all feedback is glowing. Judith E., an Organizational Economist, described it as “a cumbersome tool with limited features,” citing weak accessibility and responsiveness testing and calling its pricing “not reasonable.” Similarly, Anno C., a Small-Business Manager, found the platform “frustrating to integrate for effortless testing,” pointing out lag and limited customization options.

Pricing

Loop11 offers three main pricing models, all with a 14-day free trial:

| Rapid Insights | Pro | Enterprise |
|---|---|---|
| $179/mo billed annually (includes 5 seats); $199/mo billed monthly | $358/mo billed annually (includes 5 seats); $399/mo billed monthly | Custom pricing |
| - Moderated & unmoderated testing - Unlimited tasks & questions - 3 projects per month - Up to 10 participants per project - 2-hour moderated sessions - Virtual observation room - 3 observers | Everything in Rapid Insights, plus: - Heatmaps, clickstreams & path analysis - Data export - 10 projects per month - Up to 100 participants per project - 3 observers | Everything in Pro, plus: - Unlimited projects per month - Unlimited participants - Unlimited moderated observers - SSO - Report segments - Advanced security features - Personalized support |

Loop11 vs. UserTesting

The verdict: If you want to run remote moderated and unmoderated tests at an accessible cost, then it’s worth considering Loop11 over UserTesting. However, if you need more in-depth moderated testing analytics and have the budget, another tool on this list might be a better choice.

6. Userfeel: Pay-as-you-go usability testing platform

Userfeel runs on a pay-as-you-go model for unmoderated or moderated studies, with no annual subscription. You can test websites, apps, and digital products, and either recruit from Userfeel’s global participant panel or bring your own participants. Each session includes screen and voice recordings, session metrics, and real-time insights for quick decision-making.

Pros

- The biggest advantage of this platform is the pricing model—you don’t need to commit to a monthly or yearly subscription, you can simply pay for the tests you run, whenever you need

- It has a global participant pool of over 150,000 people, and you can also use your own pool of testers

- You get access to unlimited users and seats per paid-for test

Cons

- According to reviews, limited screening options make it difficult to filter participants

- Pay-as-you-go pricing can be a double-edged sword, especially if you aim to perform tests continuously. It can end up being more expensive in the long term than paying a fixed price. If a project takes longer, or requires more research than initially planned, you can end up running out of budget before getting the insights you need

- Participants report performance issues with Userfeel’s testing app

What do Userfeel users say?

While the platform has its strengths, user reviews suggest that there are some areas where Userfeel could improve to better serve its users' needs. Krezcely B. says that there can be delays in deploying tests that might affect users who require quicker turnaround times.

Another user, Gerard G., highlights a need for improvement in Userfeel’s functionality: “The transcripts need some work.” They also noted that the platform does not support tree testing, which could limit its usefulness for broader research.

Pricing

Userfeel runs on a pay-as-you-go model at $60 per credit. All plans include unlimited screener questions, demographics, and team members.

| Pay-As-You-Go | Agency and Frequent User Discounts |
|---|---|
| $60/credit | 50+ credits: $55.20/credit; 100+ credits: $50.40/credit; 200+ credits: $45.60/credit |
| Credits per session: - 60-min unmoderated (your tester): 0.5 credits - 60-min moderated (your tester): 1 credit - 20-min unmoderated (Userfeel panel): 1 credit - 40-min moderated/unmoderated (Userfeel panel): 2 credits - 60-min moderated/unmoderated (Userfeel panel): 3 credits | Same session types apply, billed at discounted per-credit rates |
| Credits per survey: - 10 survey responses: 1 credit | Same survey pricing, billed at discounted per-credit rates |
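To see how the credit model adds up for a given study plan, here is a minimal Python sketch of a cost estimator built from the per-credit rates and session costs listed above. It assumes the discount tier is set by the total number of credits in a single purchase, and the session-type keys (such as `panel_40`) are hypothetical labels, not Userfeel identifiers. Treat it as a rough budgeting aid and confirm current rates with Userfeel.

```python
# A minimal cost estimator for Userfeel's credit model, using the rates listed above.
# Assumption: the discount tier depends on the total credits in a single purchase.
# The session-type keys below are hypothetical labels, not Userfeel identifiers.

SESSION_CREDITS = {
    "unmoderated_60_own": 0.5,    # 60-min unmoderated, your own tester
    "moderated_60_own": 1.0,      # 60-min moderated, your own tester
    "unmoderated_20_panel": 1.0,  # 20-min unmoderated, Userfeel panel
    "panel_40": 2.0,              # 40-min moderated/unmoderated, Userfeel panel
    "panel_60": 3.0,              # 60-min moderated/unmoderated, Userfeel panel
    "survey_10_responses": 1.0,   # 10 survey responses
}

# (minimum credits, USD per credit), checked from the highest tier down
TIERS = [(200, 45.60), (100, 50.40), (50, 55.20), (0, 60.00)]


def price_per_credit(credits: float) -> float:
    """Return the per-credit rate for a purchase of `credits` credits."""
    for minimum, rate in TIERS:
        if credits >= minimum:
            return rate
    raise ValueError("credits must be non-negative")


def estimate_cost(plan: dict[str, int]) -> tuple[float, float]:
    """Return (total credits, estimated USD cost) for a mix of sessions."""
    credits = sum(SESSION_CREDITS[kind] * count for kind, count in plan.items())
    return credits, round(credits * price_per_credit(credits), 2)


if __name__ == "__main__":
    # 10 x 40-min panel sessions plus 20 x 60-min unmoderated sessions with your own testers
    credits, cost = estimate_cost({"panel_40": 10, "unmoderated_60_own": 20})
    print(credits, cost)  # prints: 30.0 1800.0
```

In this example, the plan comes to 30 credits, which is below the 50-credit discount threshold, so every credit is billed at the full $60 rate. Running the same estimate for a year of continuous testing is a quick way to check whether pay-as-you-go still beats a fixed-price subscription.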

Userfeel vs. UserTesting

The verdict: Pick Userfeel if you’re not testing your platform frequently and want flexible, pay-as-you-go pricing. If, however, you follow a more continuous approach to testing, it’s worth going with any of the other platforms in this list.

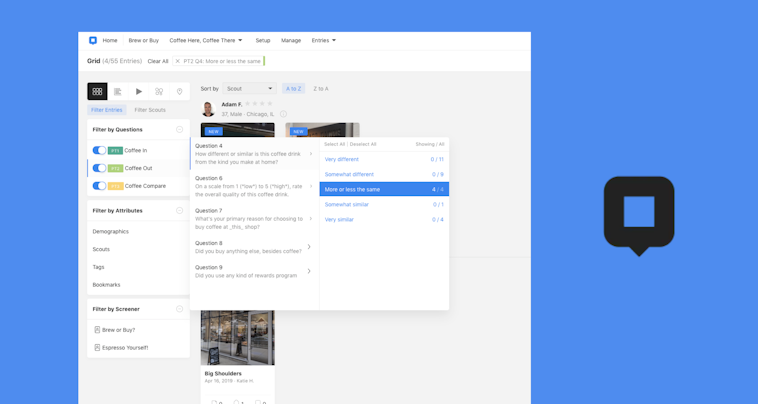

7. Dscout: Best for diary studies

Dscout is a remote qualitative research platform that supports research via mobile and desktop. It offers research tools like Diary, Live, and Express missions, with a global participant pool for rich, in-context user insights.

Pros

- Integrates with tools like Slack and Miro for enhanced collaboration

- Provides analytics features like automatic transcriptions and video playlist editing

- Delivers insights quickly through Express missions that can be completed in 24-48 hours

- AI-assisted research features for participant selection and insight synthesis that speed up analysis

Cons

- Faces challenges in recruiting participants from outside the US and Canada, which can limit global studies

- Complex study setup and approval workflows, including delays from screener vetting and manual participant placement.

- New users may find the platform's extensive features overwhelming to learn and integrate initially

- Limited niche recruitment and missing A/B or preference testing options compared to other testing platforms.

Pricing

Dscout doesn’t share public pricing. Plans are custom and typically structured as annual subscriptions with seats, activity credits, and optional research services.

Third-party benchmarks suggest contracts average around $50,000 per year, with ranges from $25,000 to $100,000+, depending on usage, support, and team size. For smaller projects, one-off studies start at roughly $3,000.

What do Dscout users say?

Some users, like Fynn M., raise concerns about the functionality of dscout's video clipping tool, stating, “It cuts off some words at the beginning and end of clips." Another user Sarah S. discusses interface challenges, explaining, "full tag names aren't visible unless they're short, which complicates the tagging process."

Dscout vs. UserTesting

The verdict: Dscout is a solid option for enterprises and large teams that want to run remote qualitative testing. If you're on a tight budget, other tools give you access to more features like card sorting, design tool integrations, prototype testing, and live website testing for less upfront.

8. Userlytics: Best for a large paid-to-test panel

Userlytics lets researchers collect qualitative and quantitative insights by conducting moderated and unmoderated tests on various digital assets, including websites, mobile apps, and prototypes. With a panel of 2M+ users and features like card sorting, tree testing, and device-specific testing, Userlytics helps teams identify UX issues and improve customer engagement. The platform also provides advanced metrics and graphical reports for effective data analysis.

Pros

- Provides AI-based insights for efficient data analysis

- Offers a large panel of 2M+ participants for diverse user feedback

- Allows session flagging and recordings for easy review and sharing of key moments

- Integrates with popular design tools like Adobe XD, Figma, InVision, and Sketch for seamless prototype testing

Cons

- Difficult to modify tests once launched

- Lacks multilingual transcription options in subscription packages

- Pay-as-you-go plan may not be cost-effective for continuous research

- Testers must download an app to participate, which can reduce completion rates

- Advanced research methods like prototype testing, card sorting, and tree testing are only available in expensive plans

What do Userlytics users say?

Most reviewers highlight Userlytics’ ease of use and fast turnaround. Erica M., Marketplace Operations Coordinator, said the platform “made setup simple, even for a first-time user, and delivered valuable insights we could act on immediately.”

However, Rick R., Digital Merchandising Manager, mentioned that “poor video and audio quality sometimes makes it hard to understand participants.” Similarly, Russ B., UX Director, noted that “requiring users to download software before joining sessions isn’t ideal and can slow down testing.”

Pricing

| Project-based | Enterprise | Premium | Limitless |
|---|---|---|---|
| Custom pricing | $34/session, unlimited sessions | $699/month | Custom quote |
| - Best for one-off projects - No subscription - Custom session pricing - Panel recruitment available | - Most popular plan - Unlimited sessions - Self-recruitment available - Panel recruitment free on plans over $7,500 - Buy 1, Get 1 FREE promotions - Cost calculator available | - 5 seats (10 seat option available) - 20 panel recruits/month - Unlimited accounts - Custom add-ons | - Unlimited accounts - Unlimited tests - Self-recruitment available - Panel recruitment available - Unlimited usage |

Userlytics vs. UserTesting

The verdict: Choose Userlytics if you want to run both unmoderated and moderated testing from one platform. If you want a tool that enables you to manage your own participants in a native database, consider another tool on our list.

9. Sprig: Best for in-product experiments

Sprig empowers organizations to gather real-time insights through targeted microsurveys, concept tests, and in-product experiments directly within their digital products, such as websites and apps. The platform supports event-triggered surveys and A/B tests, enabling companies to make data-driven decisions quickly. Sprig's analytics dashboard visualizes user feedback, helping teams pinpoint usability issues and improve user engagement.

Pros

- Automatic grouping of responses and trend detection

- Supports quantitative and unmoderated user testing through rapid in-product surveys and user feedback collection

Cons

- Lacks customizable and detailed reporting options

- Interface can be clunky when creating multiple surveys, moving questions, or adding audience tags

- Occasional errors when reordering survey questions or running multiple complex surveys

What do Sprig users say?

While many users appreciate Sprig's capabilities, some have faced challenges or identified areas where the platform could improve to better meet their needs.

Zina A., a UX Researcher, pointed out that “question skip logic needs debugging as it resets while the survey is running,” while another reviewer in financial services noted that “reporting dashboards are good for high-level patterns but too light for deeper qualitative analysis.”

Pricing

Sprig no longer lists pricing publicly on its website. However, historical data from 2024 indicates the following tiers:

- Free plan

- Starter plan: $175/month, including 2 in-product studies, 25,000 MTUs, unlimited link surveys, and unlimited seats. Mobile delivery costs an additional $200/month.

- Enterprise plan: Custom pricing

Sprig vs. UserTesting

The verdict: UserTesting’s pricing is aimed at enterprises and larger teams able to invest more. Sprig is more affordable, but offers less value than other tools. Maze is the best of both worlds. It’s more cost-effective than both tools, while also offering a large range of user research methods.

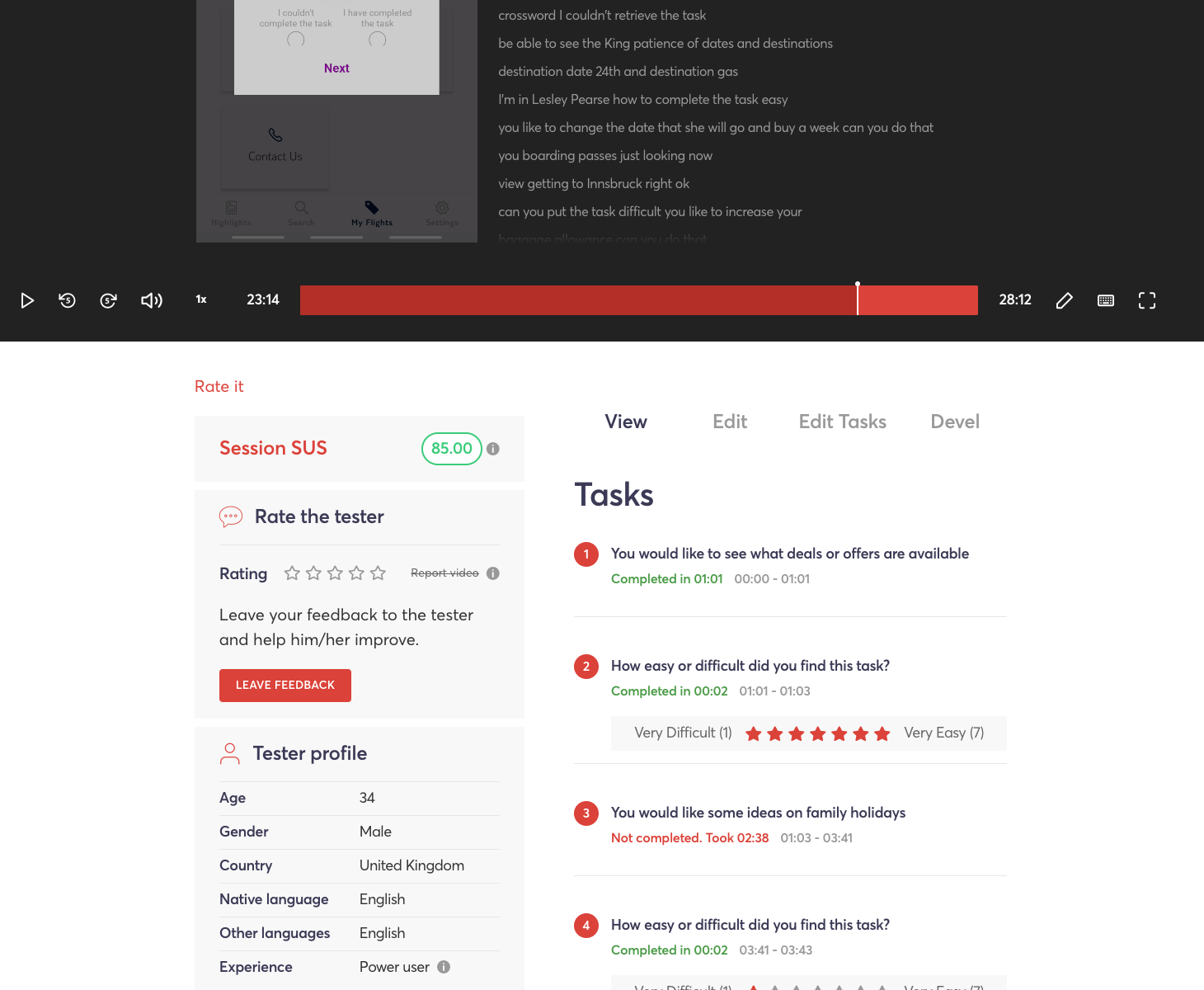

10. Trymata (formerly TryMyUI): Best for testing customer journeys

Trymata, formerly TryMyUI, offers unmoderated testing capabilities to capture a wide range of user interactions. It also supports quantitative and qualitative analysis. Trymata's tools make it easy to identify usability issues, optimize user journeys, and ensure that your products meet user expectations.

Pros

- Versatility in moderated and unmoderated usability testing

- Intuitive and easy to navigate

- Provides immediate and actionable insights from user feedback

- Rich data collection through features like screen and voice recordings

Cons

- Cost can be a barrier for smaller companies or teams with limited budgets

- Inability to access data from previous tests once an account is terminated

- Limited user testing templates library

- Limited re-test opportunities: once a test is completed, errors can't be corrected, which is a problem if initial results are unsatisfactory

- Many reviews date back to 2023 or earlier and are listed under its former name, TryMyUI, rather than Trymata

What do Trymata users say?

Many users mention limited tests and the unavailability of slots, which suggests a need for better management of test availability and user engagement. Roszaimy Y. says, "The prototypes often encounter errors such as unresponsive buttons and dead links." Other users comment on the platform's customer support, describing it as less than satisfactory.

Pricing

Pricing (shown as monthly rates):

| Team | Enterprise | Unlimited | Agency |
|---|---|---|---|
| $399/month | $1,667/month (billed annually) | $3,333/month (billed annually) | Custom |
| - Team-level license to Trymata usability testing suite - 10 Trymata panel testers/month | - Enterprise-level license to Trymata usability testing suite - 360 Trymata panel testers/year | - Unlimited-level license to Trymata usability testing suite - All Enterprise-level features & benefits | - Manage unique testing licenses for all your clients |

Trymata vs. UserTesting

The verdict: Trymata is more transparent in its pricing and less expensive than UserTesting. Plus, if you need more testers, you can buy additional Trymata credits at $45 each. That’s a more flexible option than UserTesting, where add-on sessions and seat-based licenses are bundled into custom annual contracts that often exceed $40,000/year.

It’s important to note that, unlike Maze, neither Trymata nor UserTesting has direct integrations with major design tools for prototype testing. If you need a larger participant panel, plus moderated and unmoderated testing with a variety of research methods, consider another tool on our list.

11. Userbrain: Best for multi-device testing

Userbrain is a user testing platform that focuses on usability testing. Similar to UserTesting and Maze, this platform enables teams to recruit and test participants on the same platform.

Once tests are completed, video recordings of the sessions, along with transcripts and automated insights, become available on the Userbrain dashboard. This feature helps product teams identify bugs, validate design choices, and gather user feedback efficiently across multiple devices, including phones and tablets.

Pros

- Offers a simple, intuitive interface for quick setup

- Provides test templates to help you get started

- Fast turnaround (results within 24 hours) for quicker iterations

- Provides clear and reliable video recordings of user interactions

- Allows for task customizations like specific instructions, different question types, and tasks that require screen recording

Cons

- No live chat support

- Limited demographic filters: age ranges such as 18–34 or 35–54 are too broad for some research needs

What do Userbrain users say?

It’s worth noting that Userbrain has only about 15 reviews on G2, which makes its public feedback base relatively small and may not fully represent the platform’s current performance or feature maturity.

Users appreciate the mobile app support for testing real app features without having to design and prototype an elaborate test. However, some users, like Chris C., complain about test quality and want a way to invite their own testers through bulk or automated email invitations.

Pricing

| Free | Pro | Enterprise |
|---|---|---|
| $0/month | $125/month | Custom pricing |
| - Get Userbrain testers on demand - Test with your own users - 5 collaborator seats - AI notes & clips - Automated test reports - AI insights - Custom contracts & billing options | - 3 Userbrain testers included/month - Test with your own users - 15 collaborator seats - AI notes & clips - Automated test reports - AI insights - Custom contracts & billing options | - Custom tester packages - Test with your own users - Unlimited collaborator seats - AI notes & clips - Automated test reports - AI insights - Custom contracts & billing options |

Userbrain vs. UserTesting

The verdict: These two tools are very similar, with Userbrain edging ahead because its pricing is slightly more accessible for smaller teams.

12. Hotjar by Contentsquare: Best for heatmaps and product behavior analytics

Hotjar is built for unmoderated, always-on behavior analytics (desktop, tablet, and mobile web) on live websites. It’s best known for visual behavior tracking through heatmaps, session replays, in-page feedback, and surveys, helping UX and product teams quickly spot where users click, scroll, hesitate, or abandon.

Pros

- Captures user journeys through session recordings

- Simplifies real-time feedback collection with on-page surveys and polls

- Ideal for spotting friction points or validating small changes without needing complex testing

Cons

- Expensive for larger teams or sites with high traffic

- Lacks advanced segmentation for user feedback analysis

- Some recordings miss interactions on modern or JS-heavy sites

- Missing many research methods, such as card sorting, tree testing, prototype testing, and more

What do Hotjar users say?

While users appreciate heatmaps and session recording features, some users like Gabor F., a Marketing Manager, note that “session recording limits can be restrictive, and filtering data could be smoother.” Shobhit A., a Technology Director, adds that “recordings can slow down page load speed and pricing gets high with scale.”

Pricing

| Free | Growth | Pro | Enterprise |
|---|---|---|---|
| $0 | $49/month | Custom pricing | Custom pricing |
| - Up to 20k monthly sessions - Session Replay + unlimited heatmaps - Funnels - Standard filters - 7 integrations - Dashboards | - All Free features - Starts at 7k monthly sessions - Sense (AI engine) - 13 months of data access - Frustration score - Advanced filters - Impact Quantification - 9 integrations | - All Growth features - Starts at 1m monthly sessions - Journey Analysis - Zone-based heatmaps - Impact Quantification (enhanced) - 115+ integrations - Precision filtering (retroactive) | - All Pro features - Starts at 1m monthly sessions - Digital Experience Monitoring - User Lifecycle Extension - Error summaries - Data feeds - Unlimited projects (same business entity) - 3-month Session Replay retention |

Hotjar vs. UserTesting

The verdict: Hotjar is more affordable than UserTesting, and provides a greater selection of solutions for teams focused on web-based insights like heatmaps and session recordings. However, it lacks moderated testing, participant recruitment, and prototype testing capabilities, which are core strengths of UserTesting. If your focus is usability testing, stick with UserTesting or switch to a platform like Maze.

13. PlaybookUX: Best for remote usability and information architecture testing

PlaybookUX focuses on moderated and unmoderated user research, with tools like card sorting and tree testing. The platform also comes with built-in participant recruiting, video transcripts, and Figma prototype analytics. Its AI tools summarize transcripts, flag UX issues, draft screeners, and generate executive reports with next-step suggestions.

Pros

- Comprehensive research methods

- Allows users to tailor report dashboards to their needs

- Flexible pricing with pay-as-you-go and subscription options

Cons

- Some features are only available in higher-priced plans

- Offers limited tools for comparing current vs. previous tests

- Can be costly for startups or smaller teams that need more features

What do PlaybookUX users say?

Most public reviews of PlaybookUX date back to 2023, which makes it difficult to assess how the platform performs today.

Earlier users, like Karthikeyan P., expressed a need for in-tool charts for post-study analysis, such as customer sentiment analysis. Another user, Matt W., wanted an initial overview of how to use the platform when first signing in.

Overall, PlaybookUX appeared promising but somewhat under-developed, and without current reviews, it’s difficult to confirm whether these issues have been addressed.

Pricing

| Pay as you go | Scale | Pro | Enterprise |
|---|---|---|---|
| No subscription | $5,400/year | $8,800/year | Custom pricing |
| - Unmoderated testing - Moderated interviews - Recruit consumers & professionals - Curated question templates | Everything in Pay as you go, plus: - Card sorting - Tree testing - Surveys - Preference, first-click, and five-second testing - Tagging & universal search - Test with your own customers - Segmentation - Live note-taking | Everything in Scale, plus: - Custom consent forms - Approval flow - Figma integration / Click testing - Invisible observers - Upload outside research - SSO & SAML - Session Replays (add-on) | Everything in Pro, plus: - Bulk pricing discounts & custom session limits - Dedicated CSM - Custom onboarding - Custom contracts & ToS - 99.99% SLA - Invoice payments - Unlimited seats |

PlaybookUX vs. UserTesting

The verdict: PlaybookUX offers a more flexible pricing model with pay-as-you-go options, making it accessible for smaller teams and projects. Choose PlaybookUX if you're a smaller team or new to user testing. However, if you need an enterprise-grade platform with a vast participant pool and advanced analytics capabilities, opt for another solution on our list.

Which is the best alternative to UserTesting?

There are plenty of UserTesting alternatives with everything you need to get incredible feedback without breaking the bank. For example, you can try Optimal Workshop for information architecture (IA) research, dscout for diary-based research, or Hotjar and Sprig for gathering user feedback directly within your product.

However, if you’re looking for an end-to-end user research platform, then Maze is the right choice for you.

Maze is a comprehensive user research platform that enables product teams to collect insights at the speed of product development. Beyond moderated and unmoderated testing, it offers AI-driven analysis and reporting, prototype testing with Figma Make, Lovable, and Bolt, mobile app and responsive testing, card sorting, tree testing, and survey tools, all in one workspace.

The best bit? You can try Maze’s free-forever plan to find out if you like the platform before making your decision. No fees, no commitment, just a chance to find your way around the platform (and your users!).

Frequently asked questions about UserTesting alternatives

What are the best alternatives to UserTesting?

Here are the best user research alternatives to UserTesting:

- Maze

- Lookback

- Optimal Workshop

- Lyssna

- Loop11

- Userfeel

How much is UserTesting?

UserTesting does not publicly disclose its pricing. According to third-party benchmarks, most contracts land in the five-figure range, with a median of around $40,000 per year. On public forums, buyers mention quotes from $10,000 to $42,000, with older “unlimited” deals near $15,000 and per-seat pricing in the low thousands, which shows how much plans can vary with negotiation and usage level.

Why switch from UserTesting?

Users switch from UserTesting for three main reasons: unpredictable pricing that can exceed $40,000 per year, missing research capabilities, and inconsistent tester quality that affects research reliability.

How does Maze compare to UserTesting?

Maze is more affordable, easier to scale, and more comprehensive in its testing methods. UserTesting contracts typically sit around $40,000/year, use a credit system, and charge extra for seats, participants, and test volume, which limits who on your team can actually run research. Maze has a free plan, and its Enterprise plans include unlimited seats and the full range of research methods.

Maze automatically analyzes results, provides usability metrics instantly, and supports a range of methods, including AI prototype validation via Figma Make, Bolt, Lovable, and Replit, plus native testing with Figma and Axure.