Just a couple of years after generative AI tools like ChatGPT hit the market, 58% of product teams are already using AI tools in research. And for many, large language models (LLMs) have become a regular part of user research workflows.

But it’s a tricky tightrope to walk: seizing opportunities presented by technological advancements, without sacrificing the human touch that puts users at the heart of user experience.

Read on for our deep dive into human versus AI user research, from developments in AI moderators and synthetic users to the strengths and limitations of both human and AI-led research. Plus, the new hybrid research model that positions humans and AI not as competitors, but collaborators.

AI has fundamentally altered the playing field of product development. In a world where everyone can build fast, speed alone is no longer an advantage.

Jo Widawski

CEO & Co-founder at Maze

The current landscape: AI’s role in user research

Today, we see a significant push-and-pull across the industry—organizations are looking to research more and build faster, so they turn to AI. But some researchers remain reluctant to adopt (let alone trust) machine learning.

This caution isn’t unfounded: artificial intelligence has a reputation for presenting false information, it carries a significant environmental cost, and of course, there’s the fear that AI could make researchers obsolete. (Spoiler: it won’t. More on this later!)

While we’d be remiss to ignore these concerns, and the ethical considerations of implementing AI in user research practices, the benefits are undeniable. With intentional use and considered best practices, AI can be a truly powerful collaborator in the UX research process.

I’m excited to see how our roles will evolve alongside AI. I like to think that because of AI we're moving into roles which allow us to focus on higher visibility, higher-impact business decisions.

Daniel Soranzo

Lead User Researcher at GoodRX

How can AI be used in research?

Since 2024, there’s been a 32% increase in adoption of AI in user research. With a wide array of AI research tools now available, the data shows teams lean on AI for a number of time-intensive tasks:

- Analyzing user research data (74%)

- Transcription (58%)

- Generating research questions (54%)

- Planning and drafting research studies (50%)

- Reporting (49%)

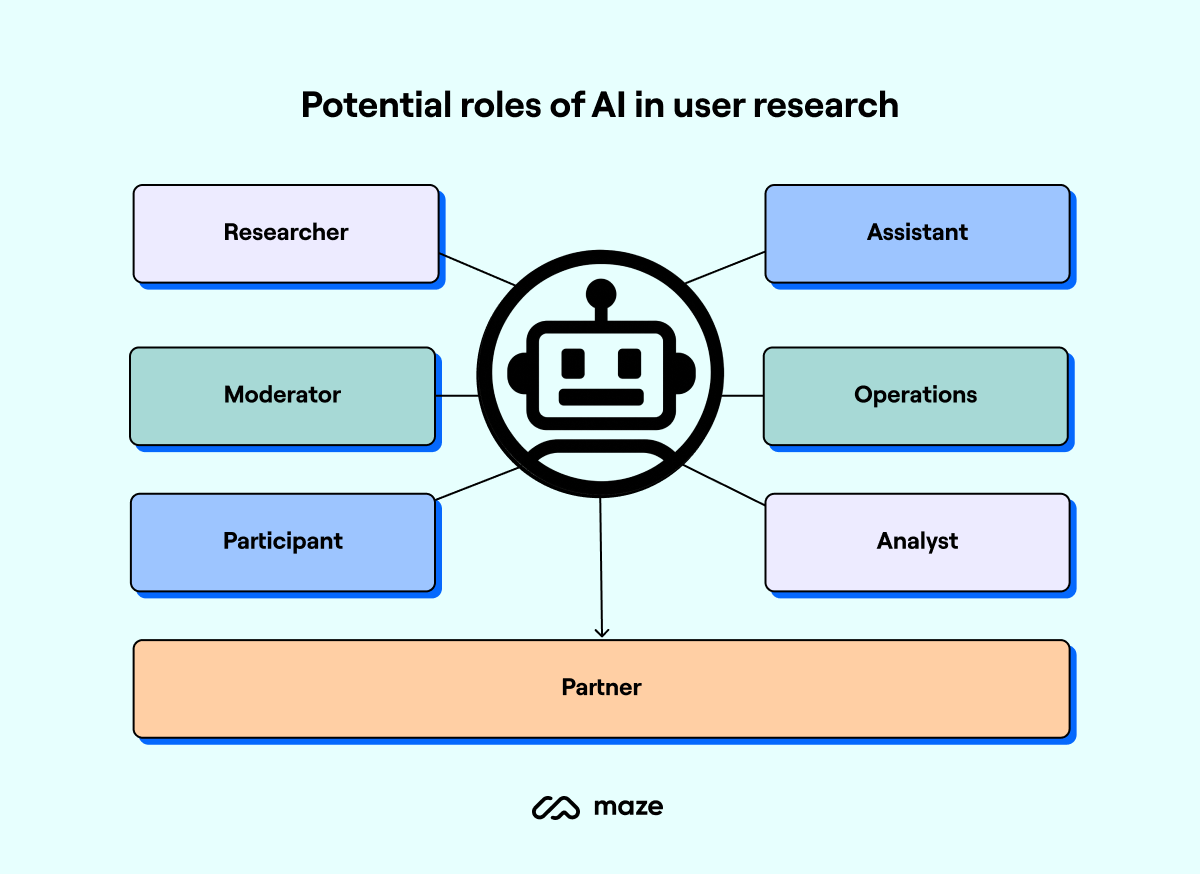

Currently, the majority of use cases firmly place AI as an assistant. While artificial intelligence is excellent at owning manual processes and freeing up researchers’ time for more in-depth research, it can also serve as a moderator conducting the research itself—or even participate in the studies as a user.

Here are some key ways researchers can use AI throughout the UX research process:

- Product discovery and ideation: Build out user personas, conduct competitive analysis, market research, social listening, or analyze user behavior

- Recruitment and synthetic users: Recruit and screen research participants, or generate synthetic users

- Autonomous research execution: From generating research questions to moderating interviews and analyzing responses, AI can easily conduct moderated and unmoderated research solo

- Pattern recognition across large quantitative datasets: Rapidly analyze survey responses, product analytics, or website traffic to identify trends and predict future user behavior

- Qualitative research and analysis: Perform thematic analysis, co-run interviews, identify sentiment, and extract insights using voice and speech recognition

- Generative reporting and insight synthesis: Identify pain points, generate reports, create insight repositories—AI can become a virtual librarian, locating relevant data in seconds

AI as the participant: The rise of synthetic users

Barely a moment passed after the first wave of ChatGPT hype before LinkedIn feeds were flooded with hypotheses about AI-powered research participants. But what once seemed like a far-fetched sci-fi plot has rapidly become a viable tool for product teams.

The Nielsen Norman Group defines synthetic users as AI-generated profiles that mimic a specific user group and provide research findings based on that demographic—such as simulated thoughts, feelings, desires, and experiences.

Synthetic users are created by LLMs that have been trained on vast quantities of real people’s data. Tools like ChatGPT and other AI chatbots can create synthetic users, but there are also purpose-built tools and companies, like Synthetic Users, designed for this task.

Now, in theory, this sounds great, right?

The perks of synthetic users mean you can save time and money by:

- Generating AI users who will match your user persona every time

- Bypassing the challenges of participant recruitment

- Scaling user testing without needing more resources

- Minimizing the risk of human cognitive biases, e.g. human users giving the answer they perceive as ‘the right one’ due to social desirability bias

However, using AI as research participants also has undeniable downsides:

- While human cognitive bias may not occur, AI is known to have ‘hallucinations’ and provide false information, and it can skew its answers toward what it expects the user wants to hear or what seems ‘correct’ (known as sycophancy)

- Identical perfect-persona users every time means teams may become blinkered to diversity, anomalies, or variance in their user base

- Humans are not perfect, but synthetic users often behave as if they are: they tend to out-perform real participants, so the resulting data doesn’t reflect how real users behave

Above all, if we automate the moderator and the participants, will researchers risk falling out of touch with users?

AI-driven research: Benefits and limitations

AI has many strengths that make it valuable as a researcher, or research assistant, but it also has some downsides. Let’s weigh them up.

Benefit #1: Speed and efficiency

The most appealing and undeniable perk of AI-driven research is the scale and speed with which it can conduct studies, process data, and analyze user research.

Maze’s Future of User Research report revealed that 58% of product teams cite improved team efficiency and 57% note faster turnaround times since adopting AI into UX research workflows. Use cases emphasize speeding up manual processes like screening research participants, generating reports or user interview transcripts.

With organizations needing to build fast and build right, this kind of research boost from AI can be the difference between a killer product launch, and being outpaced by the competition.

Benefit #2: Scalability and cost-effectiveness

Today, 63% of teams cite time and bandwidth constraints as a major challenge, with 42% referencing budget as their top blocker to conducting more research.

By using AI in UX research, organizations can optimize workflows and minimize overhead spend—scaling research programs without needing to invest in more team members (or burn out the existing team, as so often happens).

With 24/7 capabilities to run constant studies, AI can truly revolutionize the pace and depth of research.

It can tackle vast amounts of quantitative data from web traffic or product analytics in a few moments, boosting analysis depth and scale.

What used to take me weeks now takes half the time. If my company were to get rid of AI, I'd worry about how challenging it would be to accomplish the same tasks in the same amount of time.

Daniel Soranzo

Lead UX Researcher at GoodRX

Benefit #3: Reducing human errors and bias

Another considerable perk of introducing AI is the reduction of human errors and bias.

As long as research has existed, it’s been plagued by very human problems: mistakes, mishaps, and pesky cognitive biases that worm their way in to influence decisions.

While AI has its own challenges with bias, there’s much to be said for minimizing the risk of typical human errors. For example, you can have AI check your set of user interview questions for leading questions or easy-to-miss grammatical errors.

Limitation #1: Need for human oversight

While AI is helping teams increase output, there are limitations.

Many teams find the need for human review is a top concern (74%), with the time needed for review nearly outweighing the productivity gains.

Of course, you can implement and ignore, but for the majority of teams carefully adopting AI, we can’t ignore the additional work that comes with reviewing AI outputs and manually overseeing its work.

Limitation #2: Technical gaps and built-in bias

Alongside concerns of reliability, there are technical limitations to AI’s capabilities: numerous research papers have found AI tools have limited emotional intelligence and struggle with nuanced cultural and contextual understanding—qualities which are vital to qualitative research.

As Kathy Garcia, Researcher in Computational Cognitive Science at Johns Hopkins University, explains, “Understanding the relationships, context, and dynamics of social interactions is the next step [for AI tools], and research suggests there might be a blind spot in AI model development.”

Researchers must consider the risk of reinforcing existing biases from an AI’s training data—meaning, if an LLM has been fed biased data (either from existing misinformed or biased data on the web, or training data built by humans with biases), then the AI will inherently carry this bias with it into research.

Limitation #3: Ethical and trust concerns

Worries about trust and credibility (67%) and ethics and privacy (39%) are also top-of-mind for researchers.

Geordie Graham, Senior Manager of User Research at Simplii Financial, emphasizes that the responsibility for ethical use of AI sits on the shoulders of researchers: “I think best practices will have to evolve in the same way we've developed best practices in participant management, ensuring we don't use AI in an unethical way or share misleading data.”

There are a number of examples of AI being untrustworthy, from experiencing ‘hallucinations’, to providing misinformation, or even deliberate misdirection (‘sycophancy’). How AI companies use the data fed to AI tools also remains a murky legal area.

It's the wild west. There are no clear guidelines on how to use AI or when it's acceptable to use it.

Geordie Graham

Senior Manager of User Research at Simplii Financial

Despite these concerns, Geordie emphasizes the value of AI: “It can be a really important tool in increasing and augmenting human capacity.”

Human-driven research: Capabilities and constraints

Human researchers are an organization’s biggest muscle. Like the human heart, they pump user insights around the product ecosystem to fuel product decisions and business progress.

And their success can’t be denied—companies that utilize researchers and user insights to inform business strategy report 2.7x better outcomes (including 5x better brand perception, 3.6x more active users, and 3.2x better product-market fit).

But just like AI, human researchers have to balance strengths and weaknesses. Let’s take a closer look.

Strength #1: Emotional intelligence and contextual awareness

While AI excels at pattern recognition and reading datasets, humans outperform even the best LLM in their ability to predict human behavior or anticipate feelings.

It's not enough to just see an image and recognize objects and faces. Real life isn't static. We need AI to understand the story that is unfolding in a scene.

Kathy Garcia

Researcher in Computational Cognitive Science at Johns Hopkins University

Being able to effectively understand nuanced social and cultural context is critical for a number of qualitative research methods like user interviews, focus groups, field studies and diary research.

But AI struggles to react appropriately, adapt in social situations, and ‘read the room’—all stemming from an inherently human ability to feel empathy.

Strength #2: Flexibility in unpredictable scenarios

Similarly, AI struggles to pivot in unpredictable environments.

As researchers at The University of Cambridge Business School found, “While AI can rapidly learn and iterate in a controlled environment, it’s less ideal for coping with disruptive events that require human intuition and foresight.”

Combined with AI’s tendency to bend the truth, this can lead to AI fabricating responses from participants or assuming patterns based on static predictions, rather than capturing the reality (which may be surprising).

Many types of research happen outside of a controlled environment, set out to test assumptions, or try to ‘break the product’.

Where AI finds it difficult to create truly novel or disruptive concepts, people excel in creative problem-solving. For certain use cases, that flexibility and the skill to adapt to evolving scenarios still make human researchers best placed for the task.

Strength #3: Self-awareness

Finally, let’s talk about cognitive biases.

Although we know both AI systems and human researchers can develop biases, the distinction comes from human researchers’ self-awareness: their capacity to acknowledge and confront these biases.

The American Psychological Association highlights that through increased awareness and structured decision-making, humans can mitigate their own biases. AI, however, lacks the self-awareness to autonomously identify and correct its own bias.

AI is good at describing the world as it is today with all of its biases, but it does not know how the world should be.

Joanne Chen

Partner at Foundation Capital

Ultimately, when breaking down what sets human researchers apart from their AI counterparts, the traits boil down to one common denominator: a researcher’s innate humanity.

Something AI cannot imitate, and never will.

Limitations of human-led research—and how AI can help

While human researchers bring depth, empathy, and creativity, their efforts are often impeded by structural and cognitive limitations that can reduce impact and slow progress in modern research workflows, such as:

- Research fatigue and human error

- Influence of cognitive biases

- Limited capacity for large-scale data analysis

- Inconsistency between researchers

- Time and resource constraints

- Challenges scaling research operations

Above all, 63% of teams cite time and bandwidth constraints as a major challenge, with 42% referencing budget as their top blocker to conducting UX research.

A number of these difficulties lend themselves to being solved by AI—human error, time constraints, limitations with user research analysis. Yet it seems the biggest limitations lie not in human ability, but in organizational restrictions.

So while AI is poised to help with these challenges, it raises a bigger question: what would happen if AI and human researchers united to evangelize the user research function?

When to use AI automation vs. human insight for user research

With AI now able to take the role of a researcher (moderator or analyst) or a user, we’re left with a number of distinct setups that let artificial and human intelligence work together to uncover deeper user insights and make better decisions, faster.

Every configuration offers benefits and downsides, with specific use-cases for each.

Human-centered research: When empathy and context are critical

Starting with the status quo: pairing a human researcher with a human user puts empathy and emotional intelligence at the forefront, emphasizing user-centered design.

When to use: For high-sensitivity research, and use cases when humanity and empathy should be at the forefront.

The biggest benefit of human-led user research is its flexibility and empathy. Humans still beat out AI when it comes to displaying emotional awareness and understanding social context, so this model is one to opt for if you’re dealing with human-centered products or delicate subject matters, such as mental health or diversity and inclusion.

AI-first research: When speed and scale matter most

AI-first research could look like AI moderators conducting user interviews with human participants, or even AI researchers interviewing synthetic users.

When to use: When you need to gather and analyze a lot of user data very quickly. Ideal for large-scale quantitative research or early product discovery and competitive research.

While it may seem unlikely right now, it’s not unrealistic to look towards a time when products are made, researched, and tested solely by AI.

Using AI researchers with AI participants could be a valuable option for early product discovery—when you’re just starting out and need to understand the market or competitive landscape quickly, or generate a lot of ideas fast for jumping-off points.

It’s important to remember that AI is a piece of technology. And a piece of technology is neither a problem nor a solution. It’s like a Lego block, right?

Jo Widawski

CEO & Co-founder at Maze

As Jo says, AI is a tool; its value depends on what we do with it. So while we’d recommend fact-checking and refining any research or ideas, using AI researchers or users (or both!) early on in the product design process is ideal for unblocking creativity or conducting fast-and-dirty research.

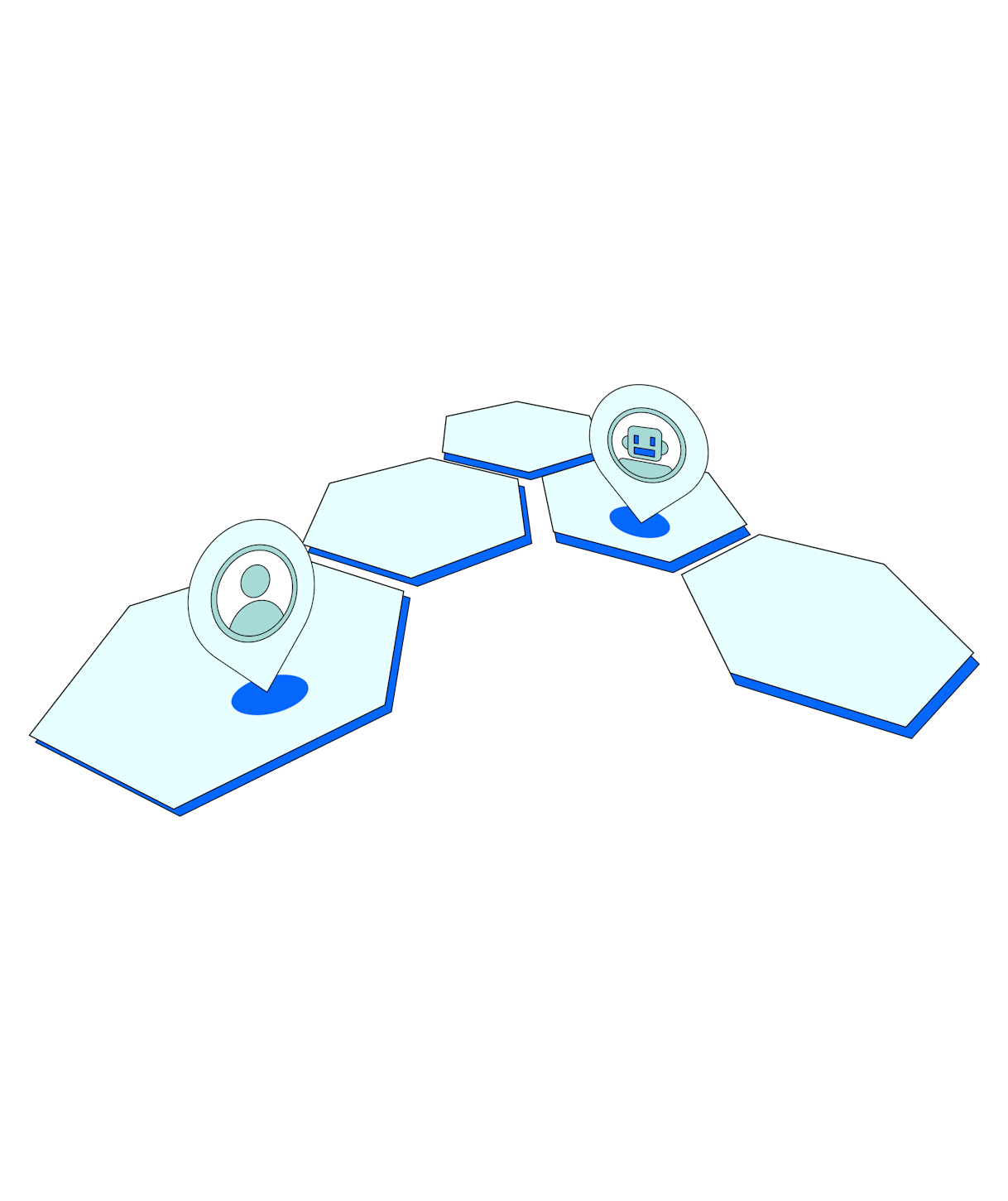

The hybrid sweet spot: Collaborative intelligence

Finally, this configuration benefits from the thoroughness and experience of human brain power, coupled with the scalability and speed of AI.

This setup might feature human and AI researchers working in tandem, AI conducting studies with a human review, or human researchers taking the lead then handing off to AI for analysis.

When to use: For analyzing vast data sets (quantitative or qualitative), conducting follow-up or additional research, or gathering first-pass data in a large volume, such as usability testing or product discovery.

The use cases for AI-human research collaboration are endless. From gathering large volumes of data during usability testing to autonomously conducting secondary research, AI researchers offer significant value—especially when used as a collaborator or assistant to human researchers.

They can act as a co-pilot: taking on the manual or time-intensive work, speeding through analysis, and freeing up time for you to focus on deeper, strategic tasks.

Using AI to analyze vast amounts of data, identify patterns, and generate insights at scale is a game-changer. By automating these formerly laborious tasks, we free up headspace and bandwidth to go deeper into areas where only our human brain can make magic happen.

Cheryl Couris

Senior Design Director at Cisco

The key here is to use AI research tools that you trust, from organizations that offer transparent guidelines and emphasize research quality and scalability. It’s ideal if the tool offers varied capabilities, from analysis or question-writing to running user interviews, so you can customize it to your needs.

You can also utilize AI users to maximize the breadth of your study. This combination is particularly effective if you’re using synthetic users to complement human user data (either new, or pre-existing from your UX research repository). For example, if you need to re-run the same study with a new prototype, or want to conduct a pilot test before kicking off research with real users.

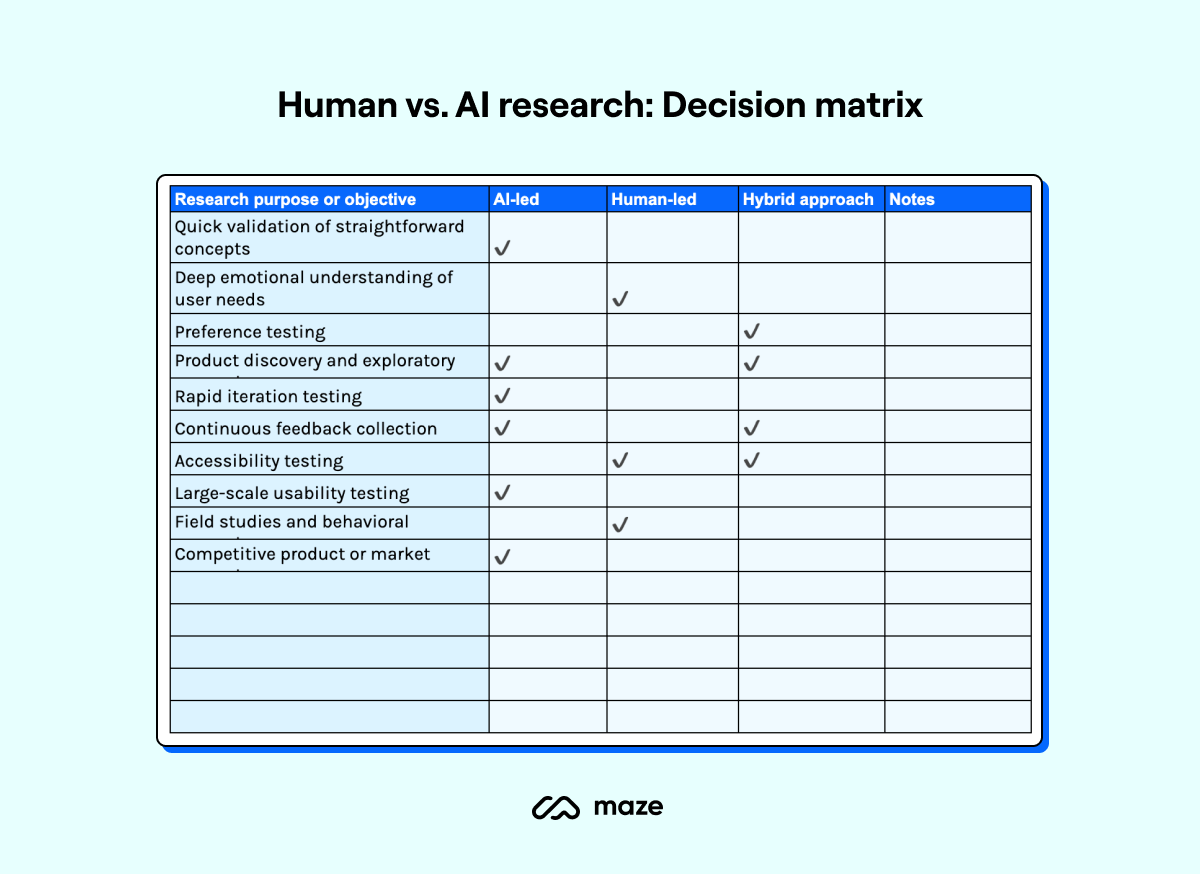

Decision framework: When to use AI vs. human research

If you’re still unsure when to use AI vs. human research, we’ve got just the thing.

This handy decision matrix offers an at-a-glance look at when to use AI-led research versus human research, or a hybrid approach.

Bear in mind this is a non-exhaustive list, and there’s no hard-and-fast rule on when to use (or not use) AI. Every team and each research project is different. There will be outliers to this framework, so consider what works for your team, and what fits into your workflows.

As Behzod Sirjani, Founder of Yet Another Studio, says: “This moment in time is an opportunity for us to really rethink what it is that we do, and how we show up in doing that really well.”

You can also download a copy of the matrix to fill in with your own use cases to better guide team research decisions.

The hybrid research model: An example of collaborative intelligence

Ultimately, product teams and researchers need to reframe the entire discussion away from an AI versus humans conversation, and towards one of collaborative intelligence.

How can human researchers work with AI to achieve superior results? How can AI be bettered by human input, and vice versa? Where does AI excel where humans cannot, and where is human intuition irreplaceable?

The future of user research isn’t about replacing researchers with AI, it’s about creating partnerships between AI and humans, where each one feeds into the other.

On my team, we see AI as a co-pilot, not a replacement—using AI to augment research has helped us do more, faster.

Cheryl Couris

Senior Design Director at Cisco

Product teams need to grow collaborative intelligence systems: workflows where AI and human researchers enhance each other’s capabilities, and where synthetic users and human testers complement each other’s user insights.

For example, say your team is looking to understand what friction your users experience while onboarding. You identify user interviews as an ideal method, but you’re also juggling two other ongoing studies and need this data by next week.

An example AI-human complementary workflow might look like:

- Plan: Human researchers outline a UX research plan, define hypotheses and research study structure

- They brief AI research co-pilots on goals, methodology, and best practices

- Recruit: AI recruitment tools identify existing users and recruit research participants who match the user persona and export a list for you to contact

- Using a tool like Maze Reach or In-Product Prompts, you can quickly recruit users

- Research: AI moderators conduct the research with a mix of human research participants for primary data, and synthetic users for a large scattering of complementary data

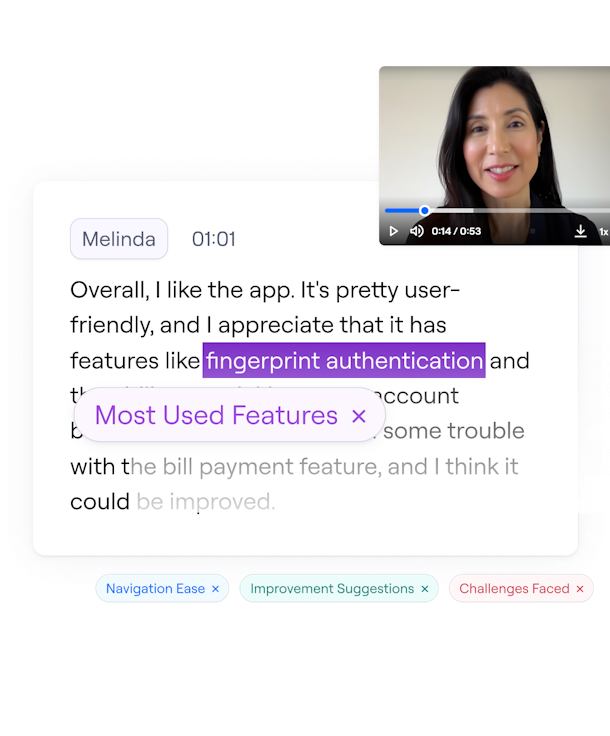

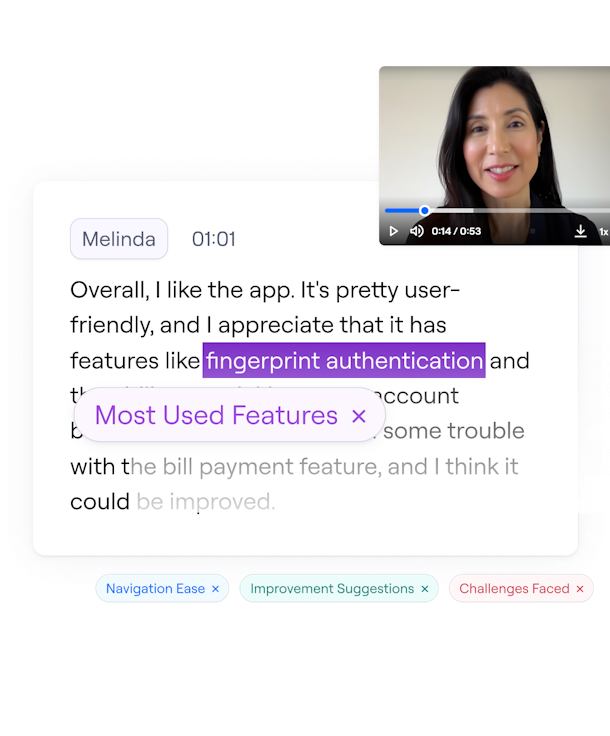

- Analyze: AI performs thematic analysis on the data and produces a report for human consumption

- Refine: Human researchers review the data, refine, and add contextual understanding

- Synthesize: Human and AI work together in parallel processing to define next steps

- AI conducts further data synthesis with secondary research

- Human researchers dig into user interview data and revisit recordings for nuanced insights

- Report: Given all the info and full access to session recordings, internal product roadmaps, and research repositories, AI pulls together a UX research report with key takeaways, video soundbites, and human-informed product decisions

- The research team present their findings and plan how to implement changes

This is just one example of what an AI-human research workflow could look like. When systems like these are intentionally designed, they’ll allow researchers to operate at their highest cognitive level, while AI handles tasks that could cause cognitive overload.
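For teams that like to map a process before adopting it, the example workflow above can be sketched as a small data structure. This is only an illustration: the `Stage` class, the `owner` labels ("human", "ai", "both"), and the helper functions are hypothetical names chosen here, and the owner assigned to each stage is one reading of the steps listed above, not a prescribed setup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    owner: str  # "human", "ai", or "both" (labels are an assumption)
    task: str


# One reading of the example workflow; adjust owners to fit your team.
WORKFLOW = [
    Stage("Plan", "human", "Outline the research plan, hypotheses, and study structure"),
    Stage("Recruit", "ai", "Identify and recruit participants who match the persona"),
    Stage("Research", "ai", "Moderate sessions with human participants and synthetic users"),
    Stage("Analyze", "ai", "Run thematic analysis and draft a report"),
    Stage("Refine", "human", "Review the data and add contextual understanding"),
    Stage("Synthesize", "both", "Define next steps in parallel"),
    Stage("Report", "both", "AI drafts the report; the research team presents findings"),
]


def stages_owned_by(workflow, owner):
    """Return the names of stages assigned to the given owner."""
    return [stage.name for stage in workflow if stage.owner == owner]


def handoffs(workflow):
    """Return (from, to) stage-name pairs where ownership changes hands."""
    return [
        (current.name, following.name)
        for current, following in zip(workflow, workflow[1:])
        if current.owner != following.owner
    ]


if __name__ == "__main__":
    print("AI-led stages:", stages_owned_by(WORKFLOW, "ai"))
    for source, target in handoffs(WORKFLOW):
        print(f"Handoff: {source} -> {target}")
```

Listing the handoffs is the useful part: each point where ownership changes is a place to decide who reviews what before the work moves on.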

The most forward-thinking organizations aren’t just using AI to do research faster—they’re using it to fundamentally transform how research works across the product lifecycle. This change allows AI to dynamically handle routine research and data analysis, while guiding human researchers towards more ambiguous or emotionally-complex aspects of user research.

This isn’t about division of labor, it’s about creating a new research ecosystem. One that is self-sufficient, and maximizes capabilities that neither human nor AI could achieve alone.

The future belongs to those who can harness the power of user insights and AI to deeply understand and respond to ever-changing user needs.

Jo Widawski

CEO & Co-founder at Maze

Evolution, not revolution: AI won’t replace researchers

Even after reading this, you might still be wondering: is AI going to (eventually) completely replace human researchers?

The short answer is no, it won’t.

But really, the question isn’t whether AI will replace researchers—it’s how researchers who use AI effectively will replace those who don’t.

The companies who shoot to success won’t be those choosing between AI or human research, they’ll be the ones strategically combining both, based on research objectives and resources.

The future remains to be seen. We can shape it through our usage—what we decide to adopt, what we regularly use, and how we critique it [AI].

David Hartley-Simon

Research Lead at Rippling

As Daniel puts it, “AI is the intern, not the subject matter expert.” While AI, like the best interns, will eventually match human researchers in skills, or even surpass them, it cannot mimic human nuance and emotional intelligence.

There will always be a place for humanity in user research, even if the role of the researcher is fluid.

While AI can speed up repetitive tasks, the human touch will remain essential. The winners will be those who can harness the power of AI and get creative with how to expand the value of UX research.

Bryanne Peterson

Global Head of Research & Strategy at Broadcom

Researchers need to adapt to the new ecosystem of collaborative intelligence, where human execution takes a back seat, AI serves as an orchestrator for research activities, and human intuition evolves to guide the strategy.

So what’s the big takeaway?

Regardless of whether you rush to test AI researchers, or reluctantly watch and wait, the future is coming.

And in the future of user research, the most powerful user insights won’t be from AI or humans alone—they’ll emerge from thoughtful partnerships between the two, via collaborative interfaces designed for a new human-AI research model.

Frequently asked questions about human vs. AI user research

Can AI do user research?

Yes, AI can conduct certain aspects of user research through tools that analyze user behavior patterns, process survey responses, and generate insights from large datasets. However, AI-powered research works best as a complement to human-led research rather than a complete replacement, especially for capturing nuanced emotional responses and contextual understanding.

How to distinguish AI vs. human research?

Distinguishing AI from human research comes down to several factors: humans excel at empathy, contextual understanding, and picking up on subtle emotional cues, while AI excels at processing large datasets, identifying patterns, and reducing certain human biases in data collection. Human researchers can adapt their approach mid-interview, while AI follows predetermined patterns. The most reliable research combines both approaches.

Will AI replace humans in user research?

AI will not completely replace humans in user research but will transform their role. While AI is increasingly handling quantitative analysis, data processing, and pattern recognition, human researchers remain essential for strategic decision-making, empathetic understanding, ethical considerations, and contextual interpretation of insights. The future lies in human-AI collaboration rather than replacement.

How will AI impact the future of UX design and research?

AI will democratize access to user insights by making research faster and more affordable, enabling continuous testing throughout product development. It will augment human researchers by handling routine analyses while allowing them to focus on strategic work. The future will see more specialized AI tools for specific research methods, plus enhanced visualization capabilities that make insights more accessible to entire product teams.

How do AI-driven tools compare to human researchers in understanding user behavior?

AI-driven tools excel at processing vast datasets, identifying patterns, and eliminating certain biases, but they struggle with understanding contextual nuances and emotional subtleties. Human researchers bring empathy, adaptability, and intuitive understanding that AI cannot replicate. The most effective approach combines AI's computational power with human interpretive abilities—AI can tell you what users are doing, while humans are better at understanding why they're doing it.